ZUNA is a 380M-parameter BCI foundation model for EEG data and a significant milestone toward noninvasive thought-to-text. ZUNA reconstructs, denoises, and upsamples EEG data across arbitrary channel layouts, and it is built for researchers, clinicians, and BCI developers working with real-world data.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe that thought-to-text, enabled by noninvasive brain–computer interfaces (BCIs), will be the next major modality after language, audio, and vision.

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. It is a key component of our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

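ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can denoise the observed channels, reconstruct missing ones, and predict signals at new electrode positions on the scalp.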

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, EEG signals clearly contain immense information and structure, which could power downstream tasks ranging from understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classic deep learning recipe of pretraining general foundation models on this data, with the goals of discovering generalizable representations underlying these signals and, ultimately, developing a foundation model that translates thought to text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.
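Before any reconstruction, the degraded channels have to be identified. As a minimal sketch of a common heuristic (not ZUNA's own pipeline), a channel can be flagged if it is flat or if its variance is a robust outlier relative to the rest of the montage. The function name `flag_bad_channels` and the thresholds `z_thresh` and `flat_thresh` are illustrative assumptions:

```python
import numpy as np


def flag_bad_channels(data, z_thresh=5.0, flat_thresh=1e-12):
    """Return a boolean mask of shape (n_channels,) marking suspect channels.

    data: array of shape (n_channels, n_samples).
    A channel is flagged if it is (nearly) flat, or if its log-variance is an
    outlier relative to the other channels (robust z-score based on the MAD).
    Hypothetical helper; thresholds are illustrative, not tuned values.
    """
    var = np.var(data, axis=1)
    flat = var < flat_thresh
    # Add a small floor so flat channels do not produce log(0).
    log_var = np.log(var + flat_thresh)
    med = np.median(log_var)
    # Scale the MAD so it estimates the standard deviation for Gaussian data.
    mad = 1.4826 * np.median(np.abs(log_var - med))
    z = (log_var - med) / (mad + 1e-12)
    return flat | (np.abs(z) > z_thresh)


# Usage: 32 synthetic channels with one dead and one very noisy electrode.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((32, 2000))
eeg[2] = 0.0     # channel dropout (flat line)
eeg[5] *= 100.0  # e.g. a loose electrode or a motion artifact
print(np.flatnonzero(flag_bad_channels(eeg)))
```

Working on log-variance with a median/MAD scale keeps a single extreme channel from inflating the threshold used to judge the others.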

The standard approach for handling missing or noisy channels is spherical spline interpolation, the default method in the widely used MNE package [1]. While simple and fast, it captures only the smooth spatial geometry of the scalp rather than the structure of brain activity, and it can produce poor or misleading reconstructions, especially as more channels are degraded.
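For reference, this baseline fits in a few lines. The sketch below follows the spherical spline formulation of Perrin et al. (1989): electrode positions are projected onto the unit sphere, a Legendre-series kernel g is fit to the observed channels together with a constant term, and the fitted spline is evaluated at the missing positions. It is a simplified illustration, not MNE's implementation; the stiffness `m=4` and truncation `n_terms=7` are assumed defaults, and `spherical_spline_matrix` is a hypothetical helper name.

```python
import numpy as np
from numpy.polynomial import legendre


def _spline_kernel(cosang, m=4, n_terms=7):
    """Spherical spline kernel g(x) = 1/(4*pi) * sum_n (2n+1) / (n(n+1))^m * P_n(x)."""
    n = np.arange(1, n_terms + 1)
    # legval expects coefficients for P_0, P_1, ...; the P_0 coefficient is zero.
    coeffs = np.concatenate(([0.0], (2 * n + 1) / (n * (n + 1)) ** m)) / (4 * np.pi)
    return legendre.legval(cosang, coeffs)


def _to_unit_sphere(pos):
    pos = np.asarray(pos, dtype=float)
    return pos / np.linalg.norm(pos, axis=1, keepdims=True)


def spherical_spline_matrix(pos_from, pos_to, m=4, n_terms=7):
    """Return W of shape (n_to, n_from) such that data_to is approx. W @ data_from."""
    a, b = _to_unit_sphere(pos_from), _to_unit_sphere(pos_to)
    n = len(a)
    # Augmented system [[G, 1], [1^T, 0]] @ [C; c0] = [V; 0].
    A = np.zeros((n + 1, n + 1))
    A[:n, :n] = _spline_kernel(np.clip(a @ a.T, -1.0, 1.0), m, n_terms)
    A[:n, n] = 1.0
    A[n, :n] = 1.0
    # Kernel between target and source positions, plus the constant column.
    B = np.hstack([_spline_kernel(np.clip(b @ a.T, -1.0, 1.0), m, n_terms),
                   np.ones((len(b), 1))])
    # [C; c0] = pinv(A)[:, :n] @ V, so interpolated values are B @ pinv(A)[:, :n] @ V.
    return B @ np.linalg.pinv(A)[:, :n]


# Usage: estimate 4 "missing" channels from 12 observed ones.
rng = np.random.default_rng(0)
pos = rng.standard_normal((16, 3))
pos[:, 2] = np.abs(pos[:, 2])               # keep electrodes on the upper hemisphere
observed = rng.standard_normal((12, 500))   # (channels, samples)
W = spherical_spline_matrix(pos[:12], pos[12:])
estimated = W @ observed                    # shape (4, 500)
```

Because the weights depend only on electrode geometry, the same matrix is applied to every time sample; nothing about the temporal or physiological structure of the signal enters the estimate, which is the limitation described above.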

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned from a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures underlying patterns of brain activity rather than relying on simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution (the number of electrodes on the device) for accessibility. ZUNA allows recordings from low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.
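To see why working from electrode coordinates removes the fixed-montage assumption, consider one generic way a coordinate-aware model could take its input: each channel becomes one token that pairs its signal with an embedding of its 3-D position, so any number of channels, in any order or layout, yields a valid sequence. This is a hypothetical sketch; ZUNA's actual input encoding is not described here, and the sinusoidal embedding and the names `position_features` and `make_channel_tokens` are assumptions.

```python
import numpy as np


def position_features(xyz, n_freqs=4):
    """Sinusoidal (Fourier-feature) embedding of 3-D electrode coordinates.

    xyz: (n_channels, 3). Returns an array of shape (n_channels, 6 * n_freqs).
    """
    xyz = np.asarray(xyz, dtype=float)
    freqs = np.pi * 2.0 ** np.arange(n_freqs)   # (n_freqs,)
    angles = xyz[:, :, None] * freqs            # (n_channels, 3, n_freqs)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(len(xyz), -1)


def make_channel_tokens(signals, xyz, n_freqs=4):
    """One token per channel: [signal samples | position embedding].

    signals: (n_channels, n_samples). The number of rows simply follows the
    number of channels, so no fixed montage is assumed.
    """
    signals = np.asarray(signals, dtype=float)
    return np.concatenate([signals, position_features(xyz, n_freqs)], axis=1)


# Usage: a 4-channel headband and a 19-channel cap give sequences of different
# lengths but the same token width (random positions stand in for real ones).
rng = np.random.default_rng(0)
band = make_channel_tokens(rng.standard_normal((4, 256)), rng.standard_normal((4, 3)))
cap = make_channel_tokens(rng.standard_normal((19, 256)), rng.standard_normal((19, 3)))
print(band.shape, cap.shape)  # (4, 280) (19, 280)
```

Since each token carries its own position, reordering the channels only reorders the tokens, which is what lets a set- or sequence-based model such as a transformer handle arbitrary layouts.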

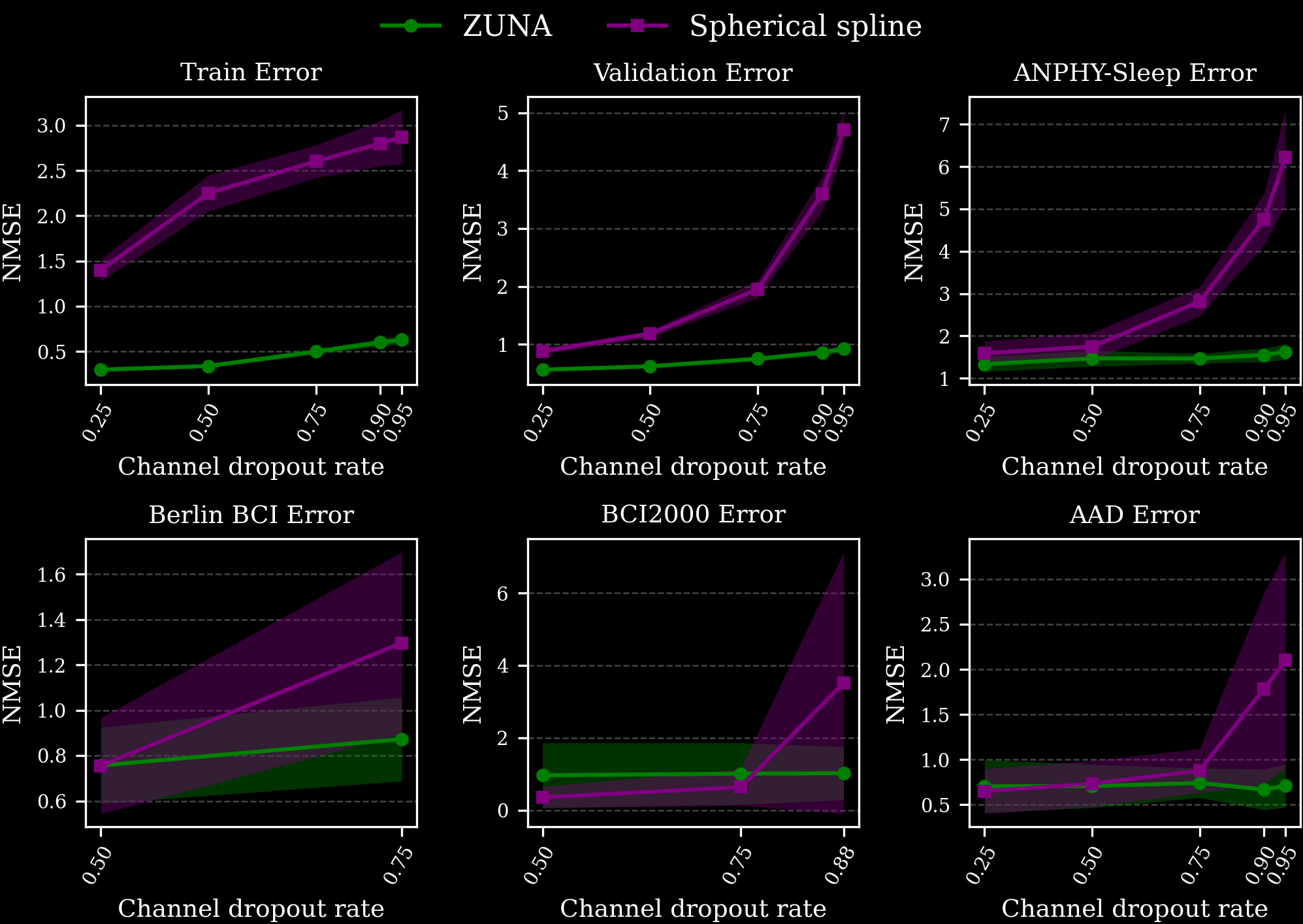

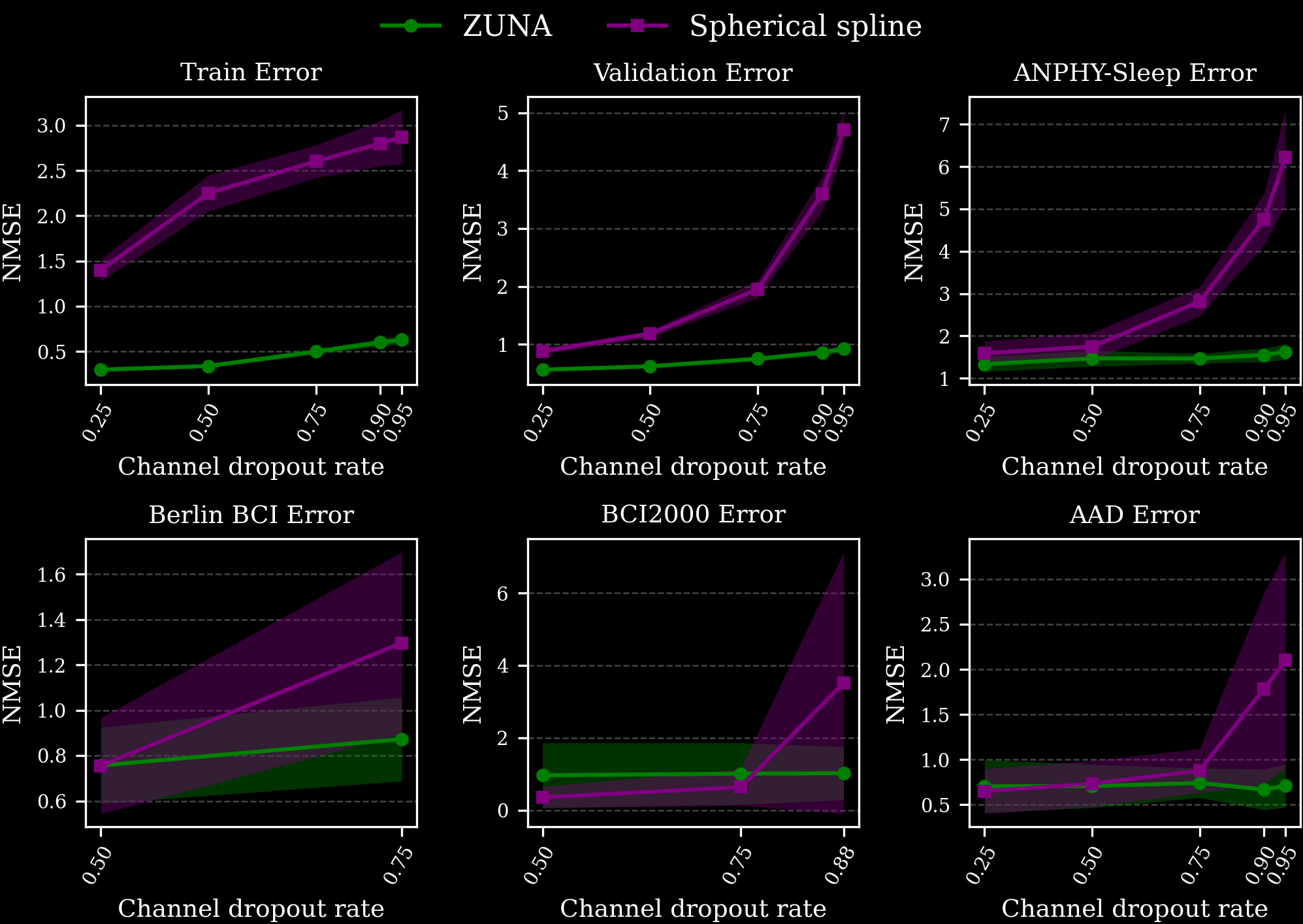

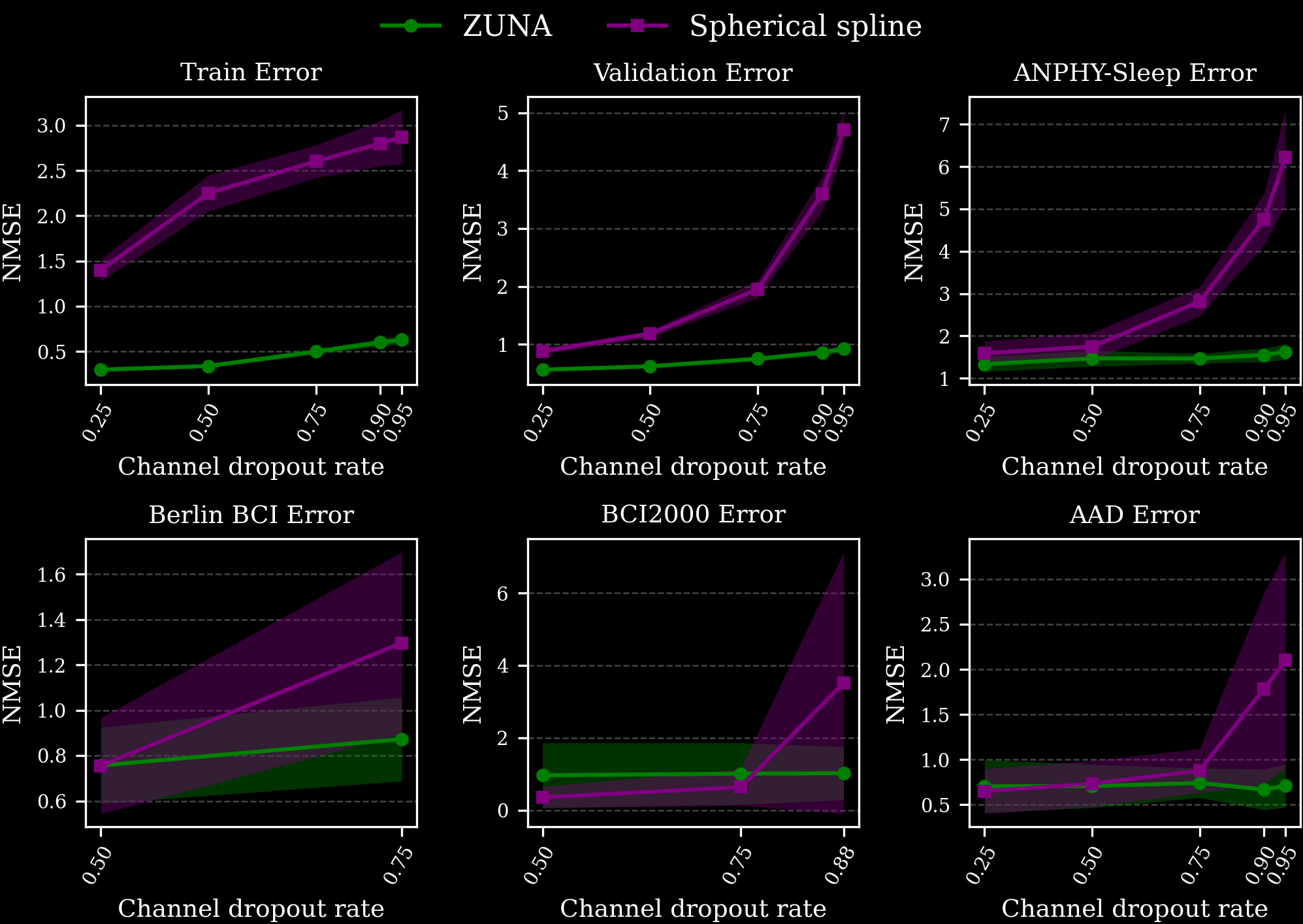

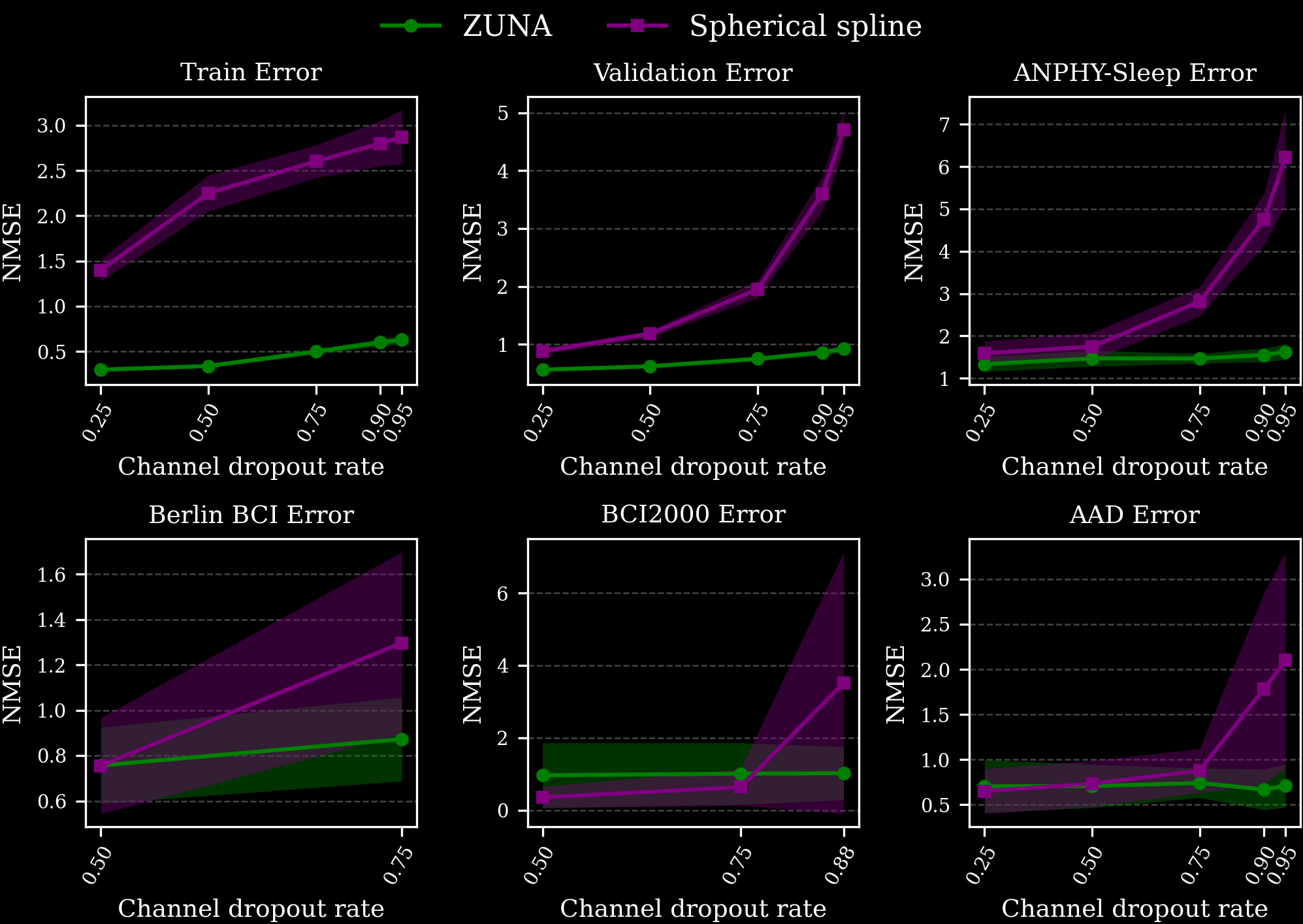

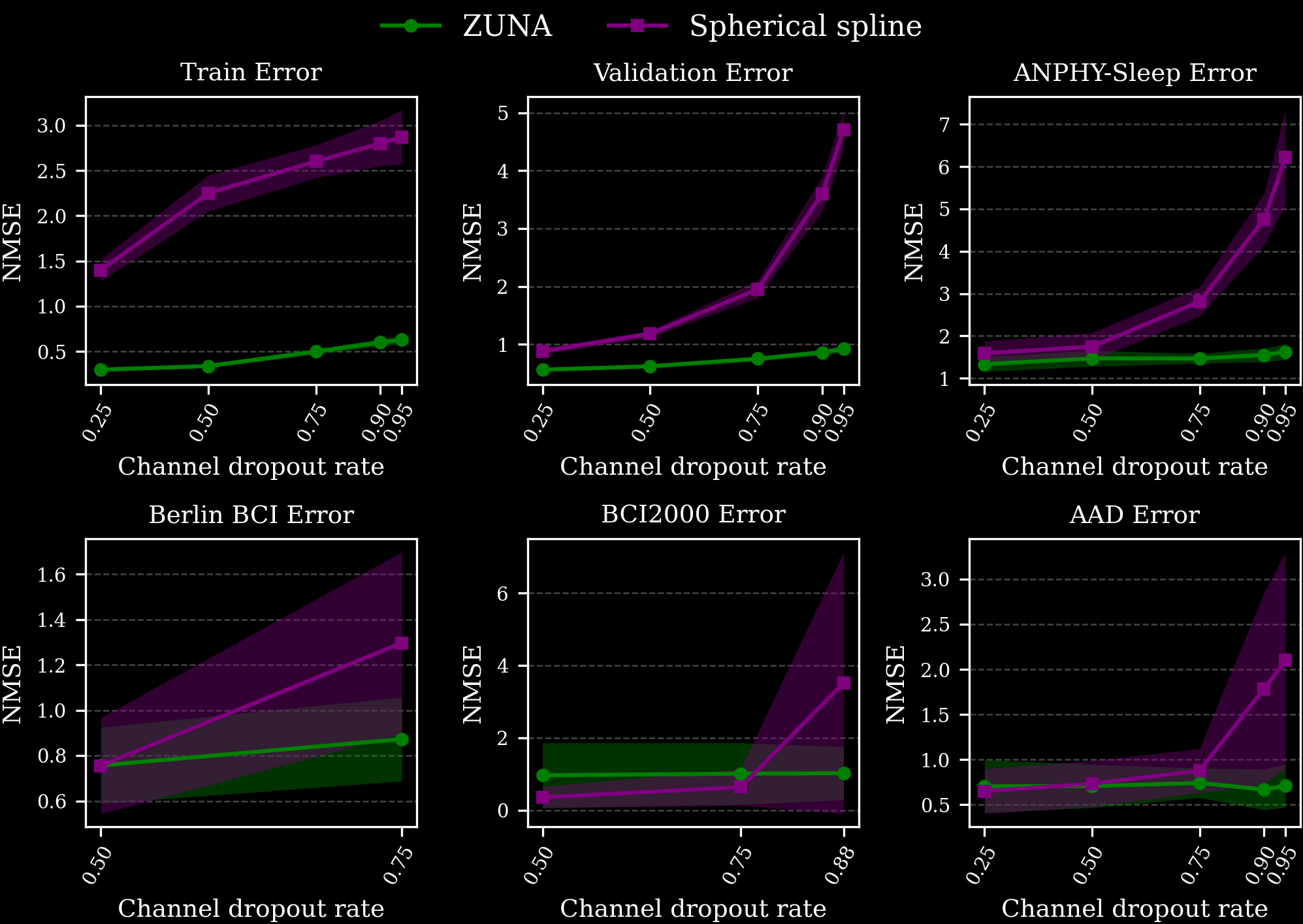

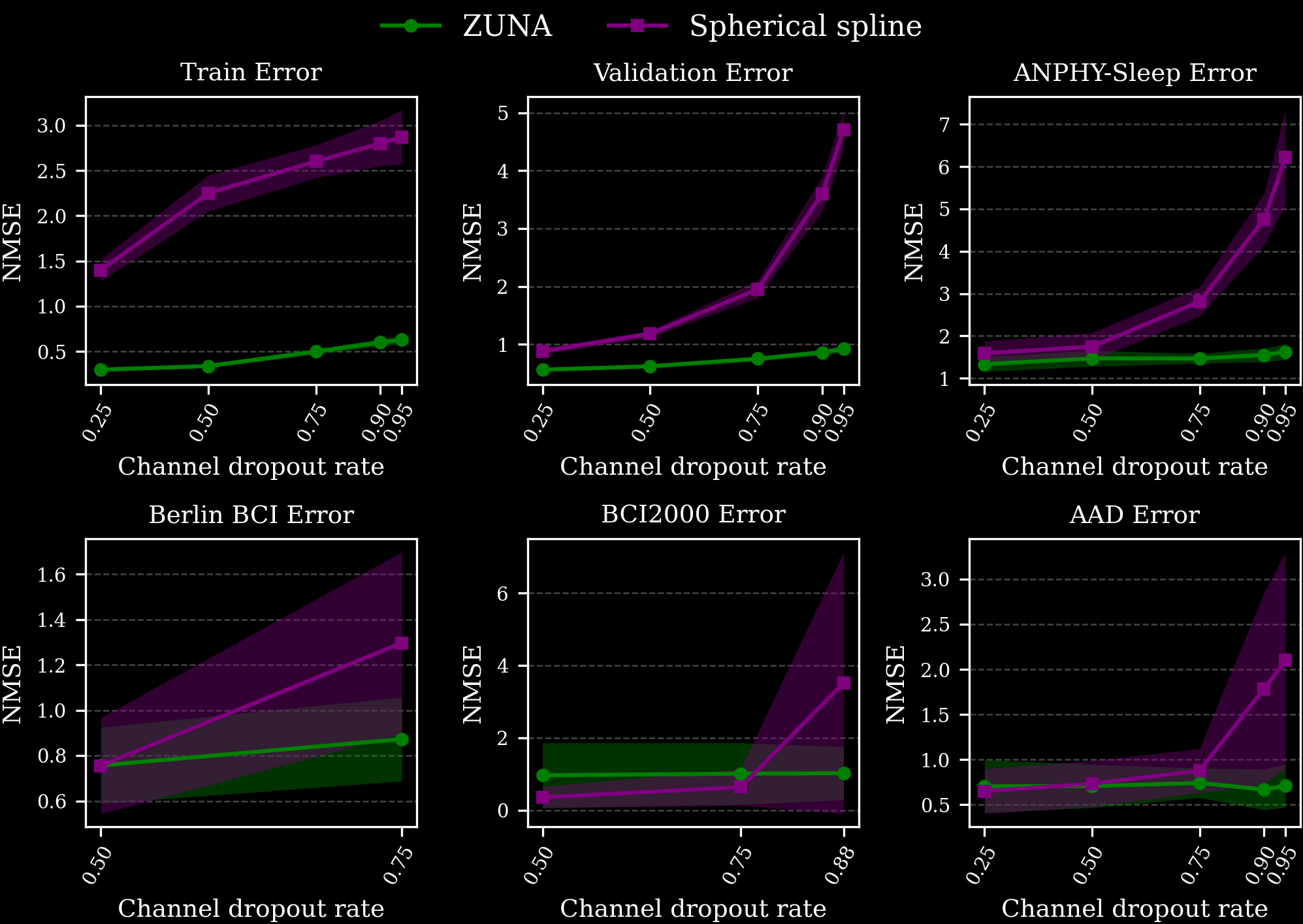

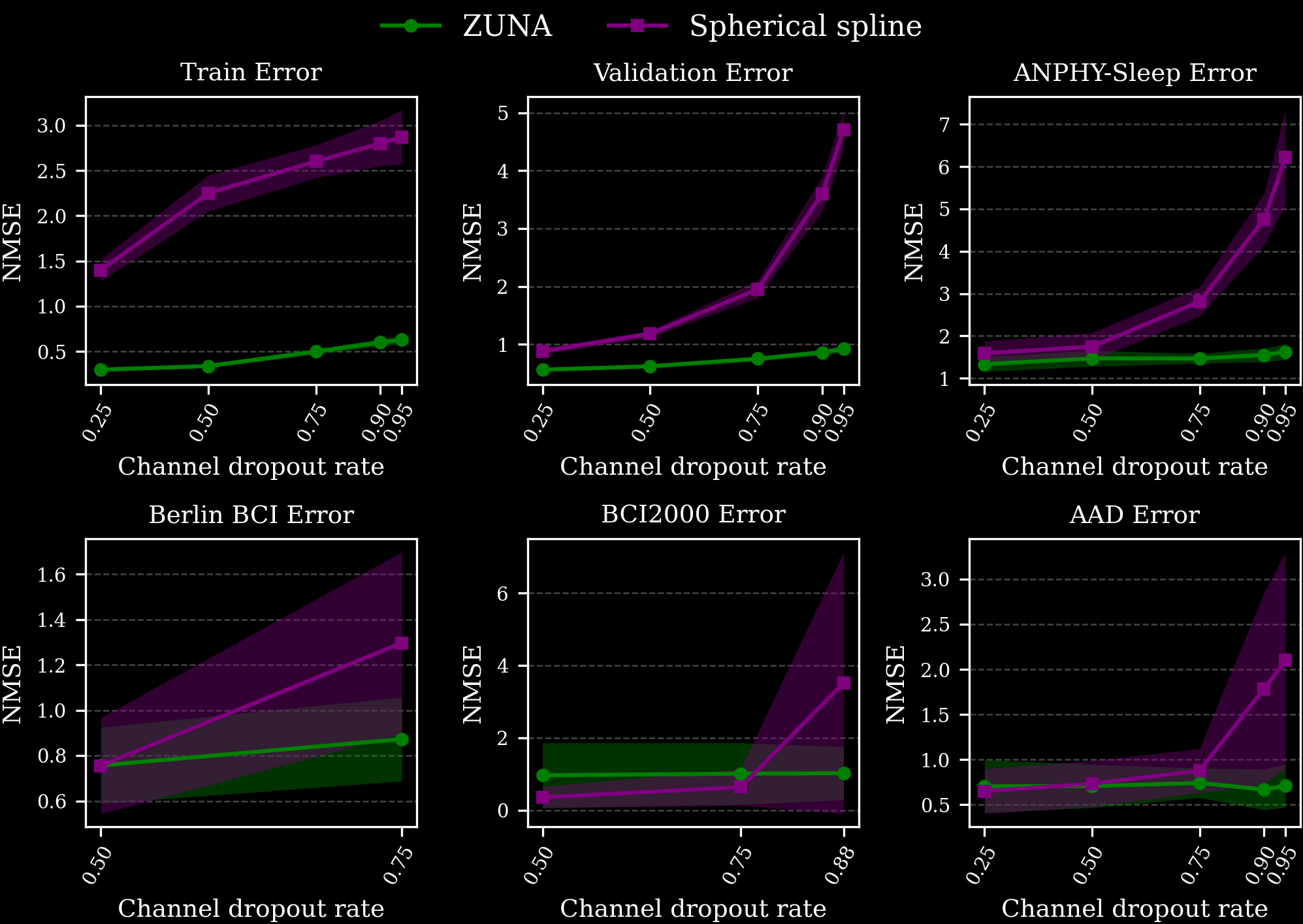

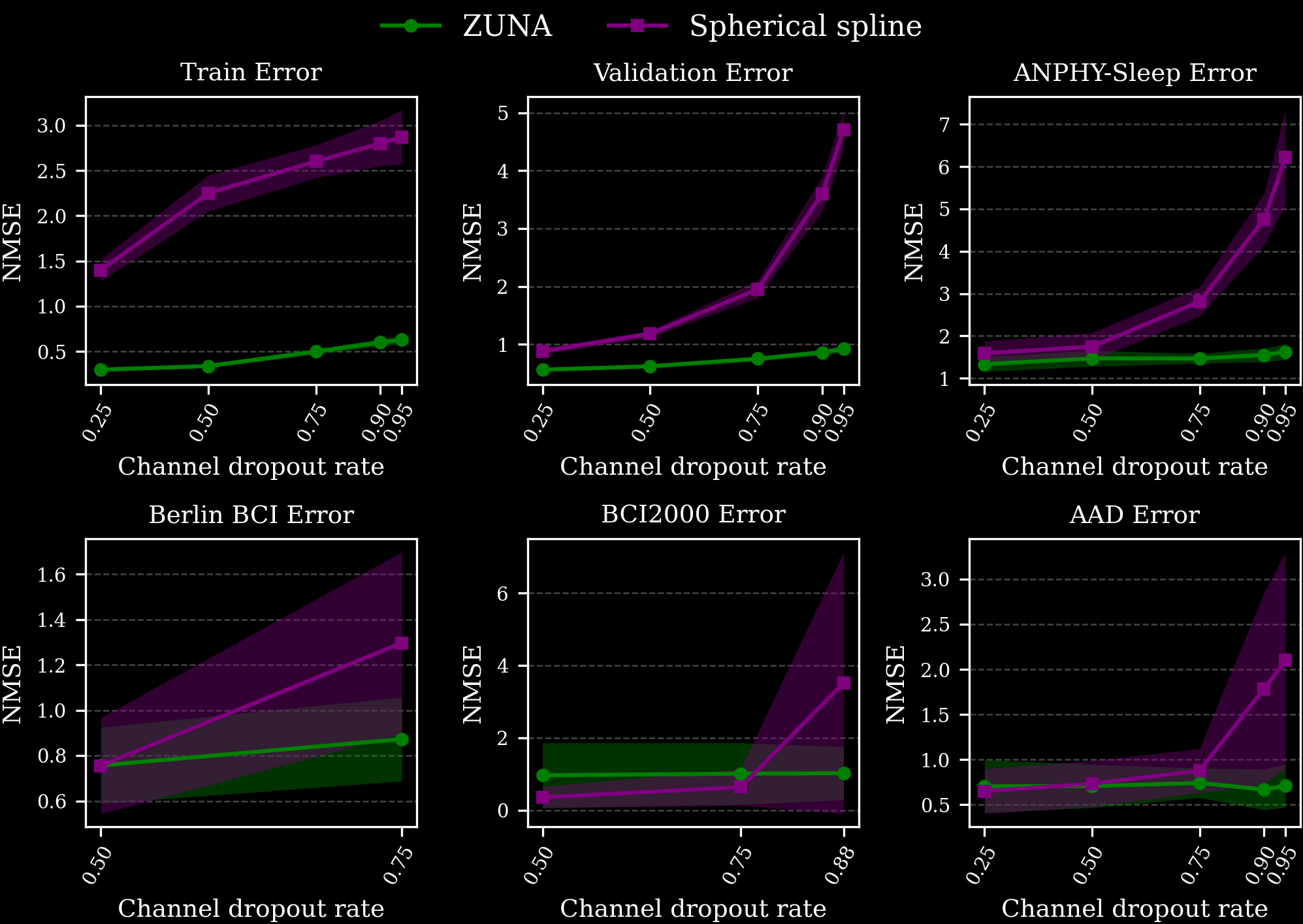

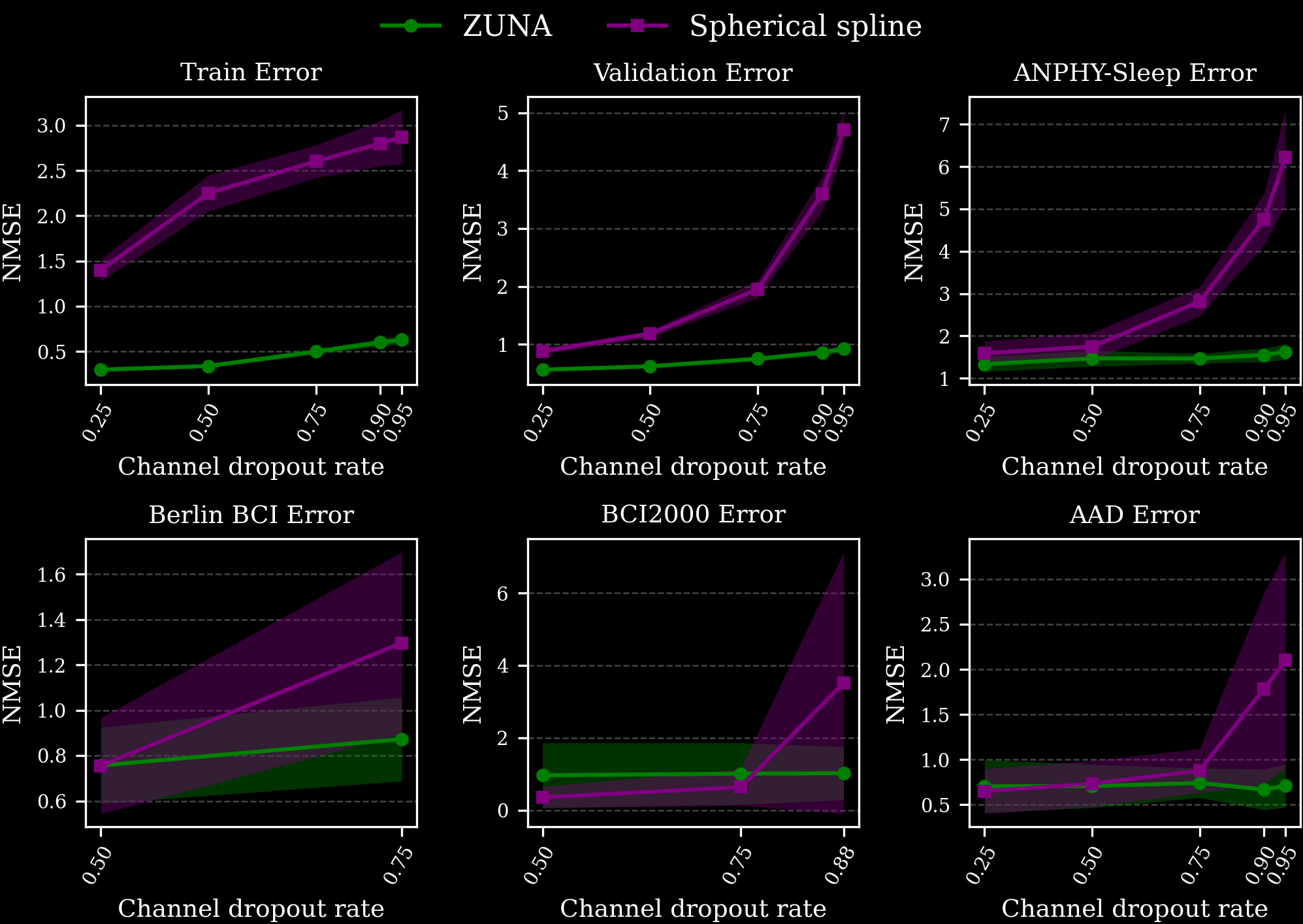

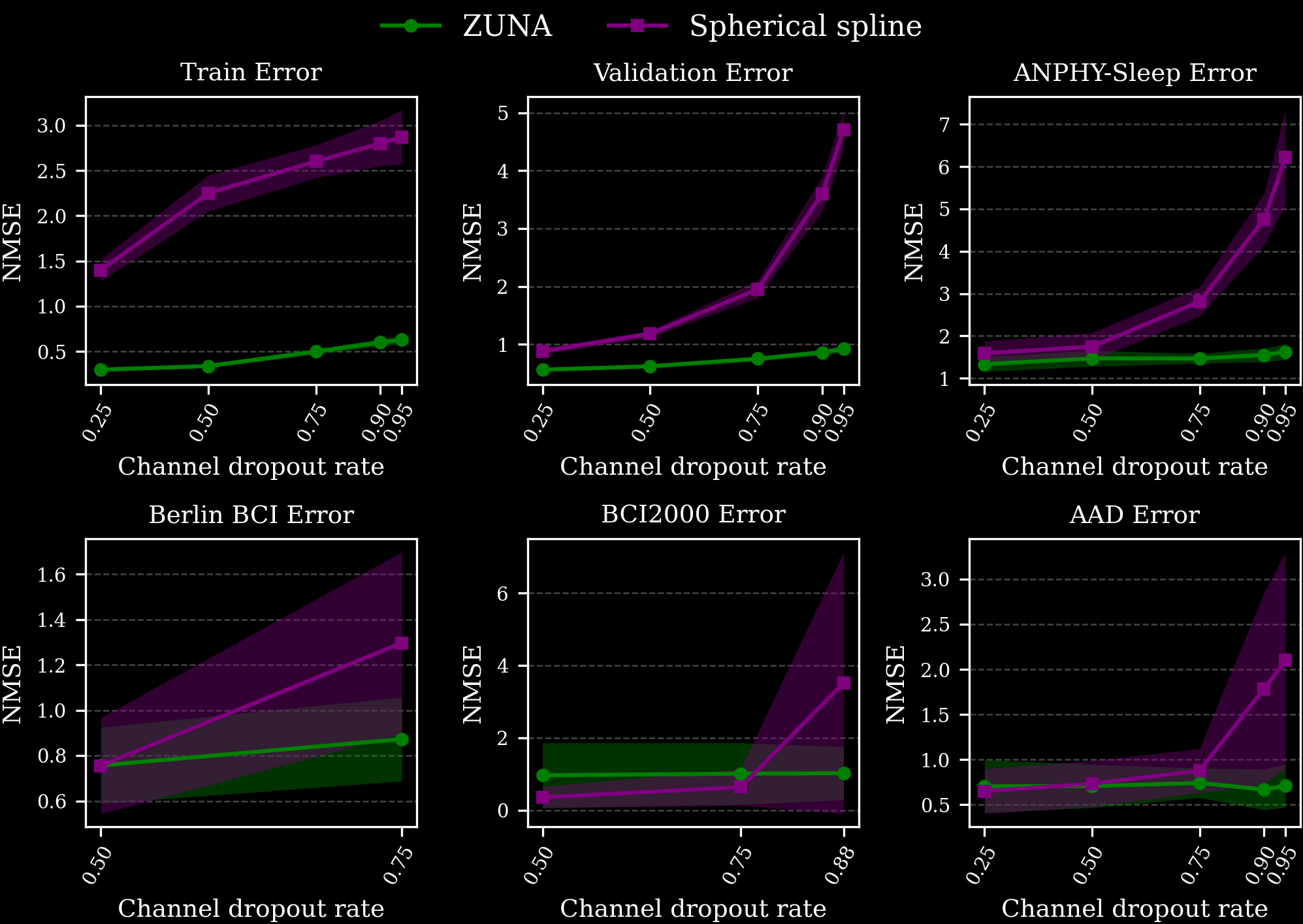

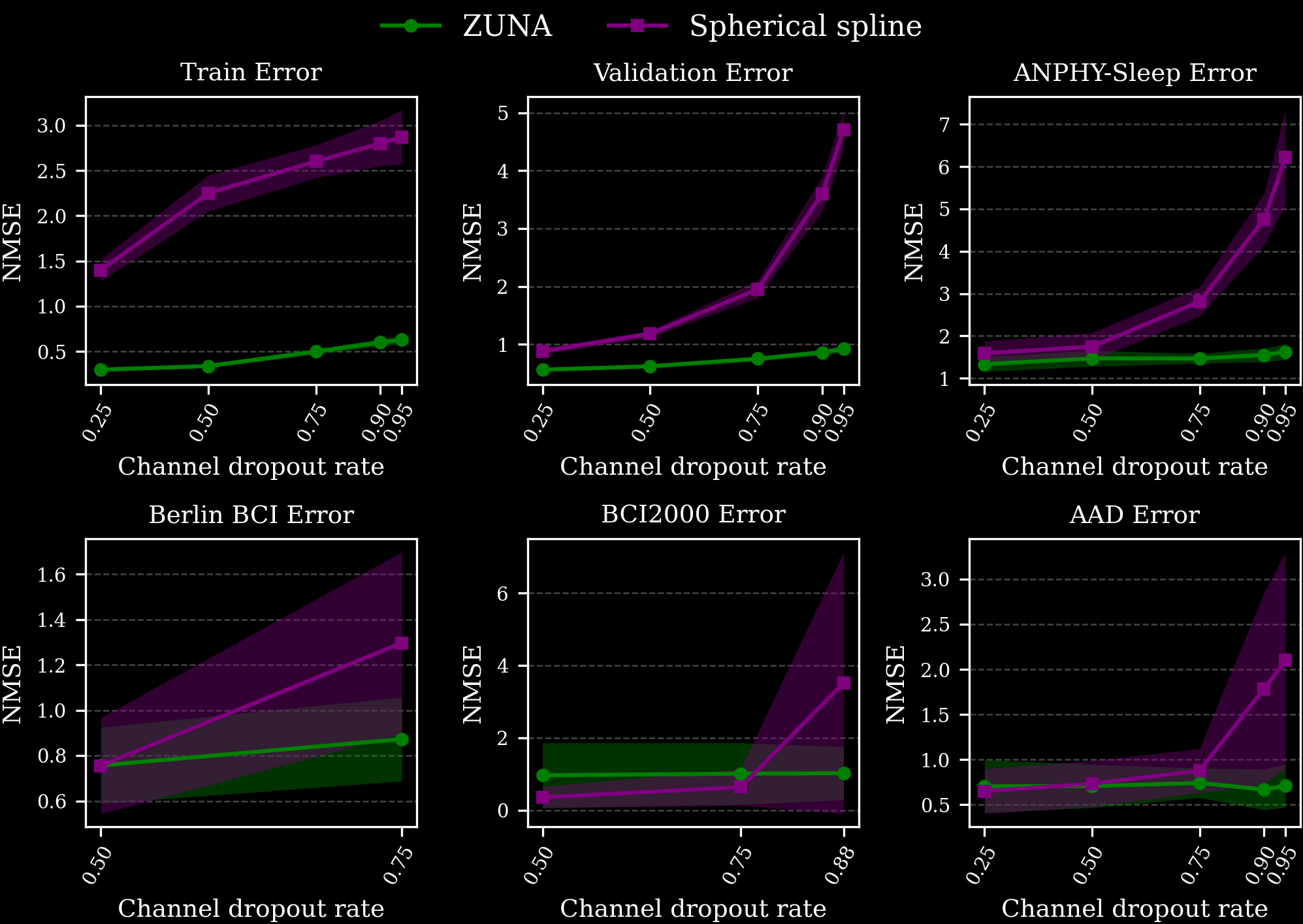

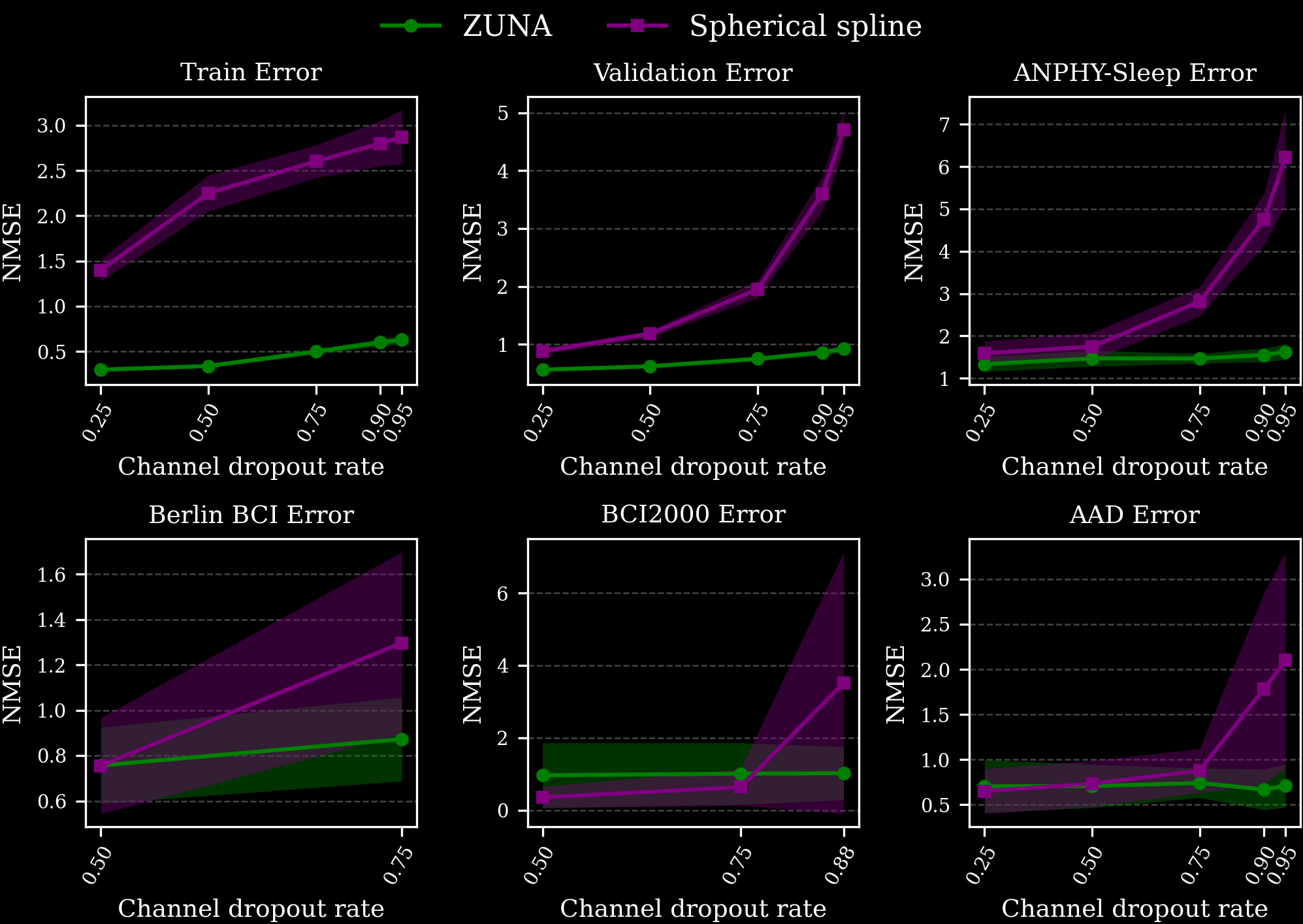

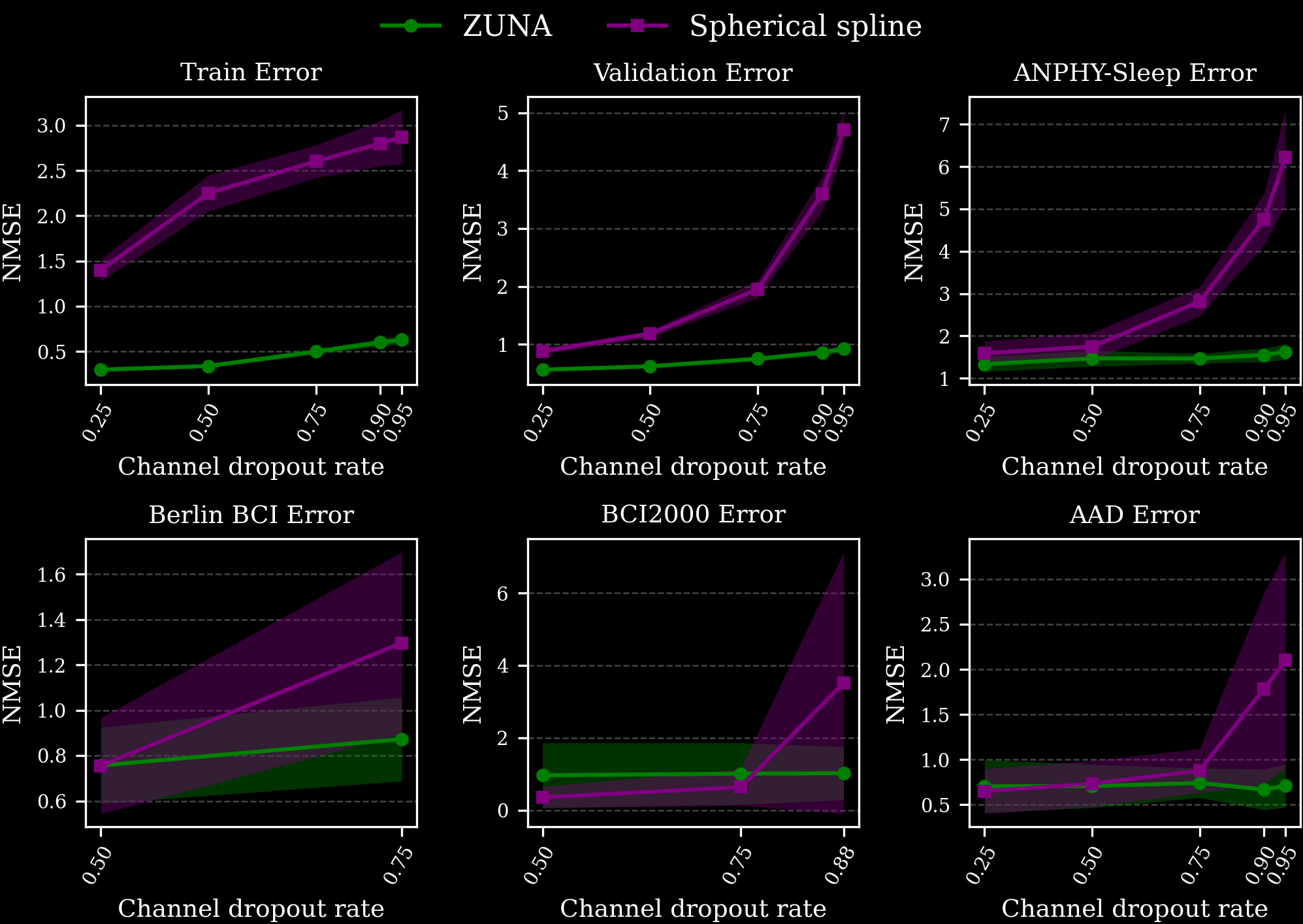

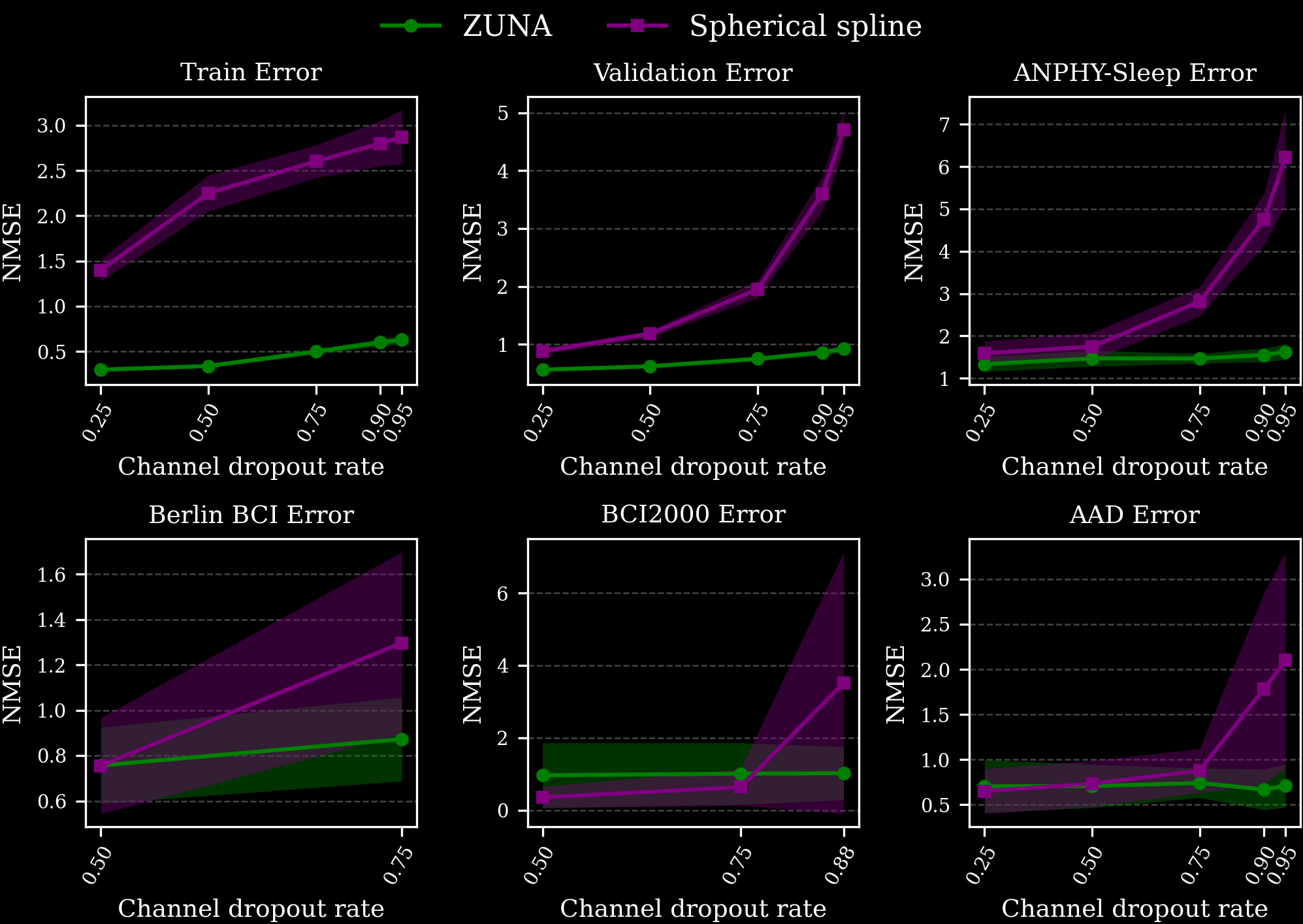

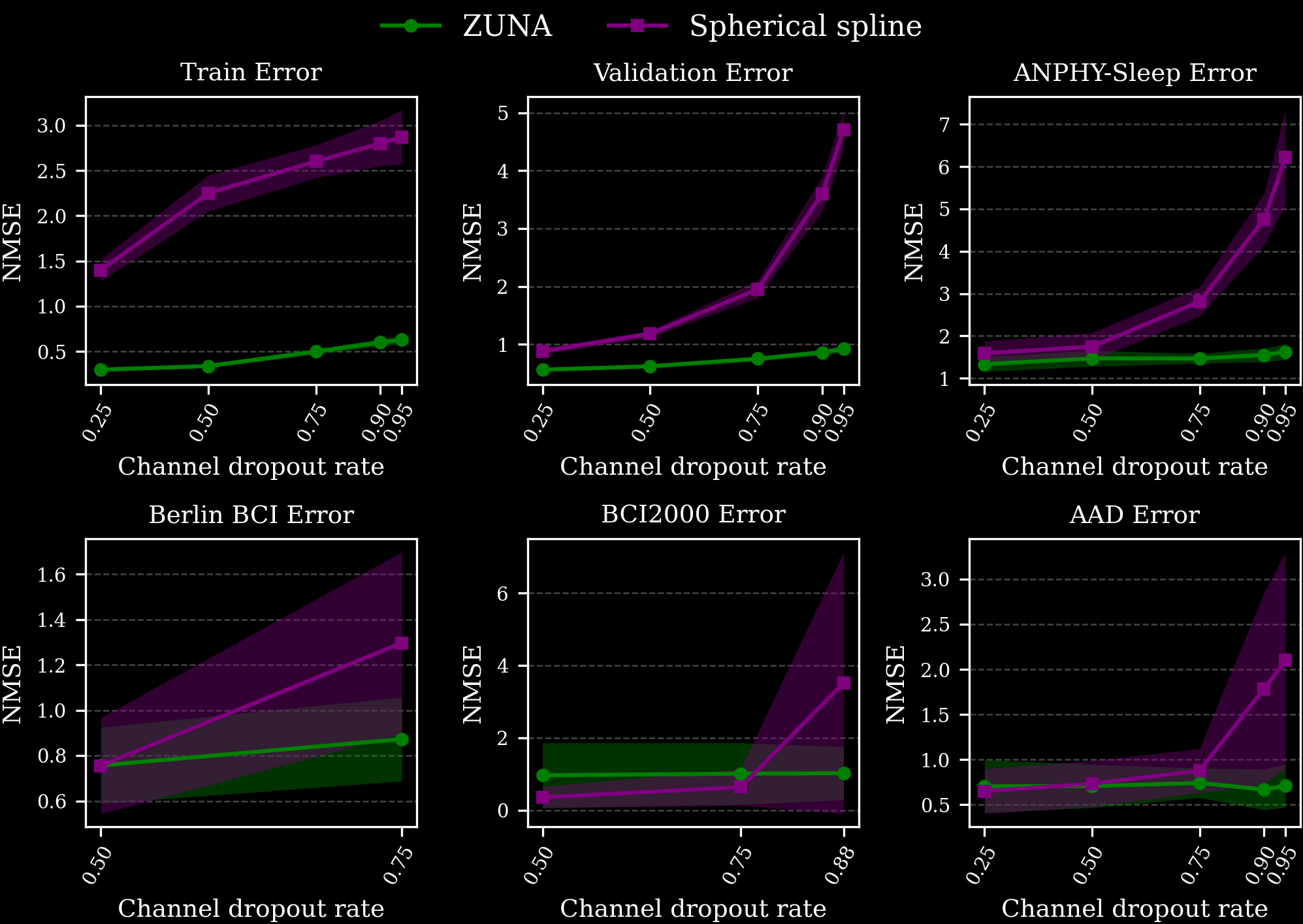

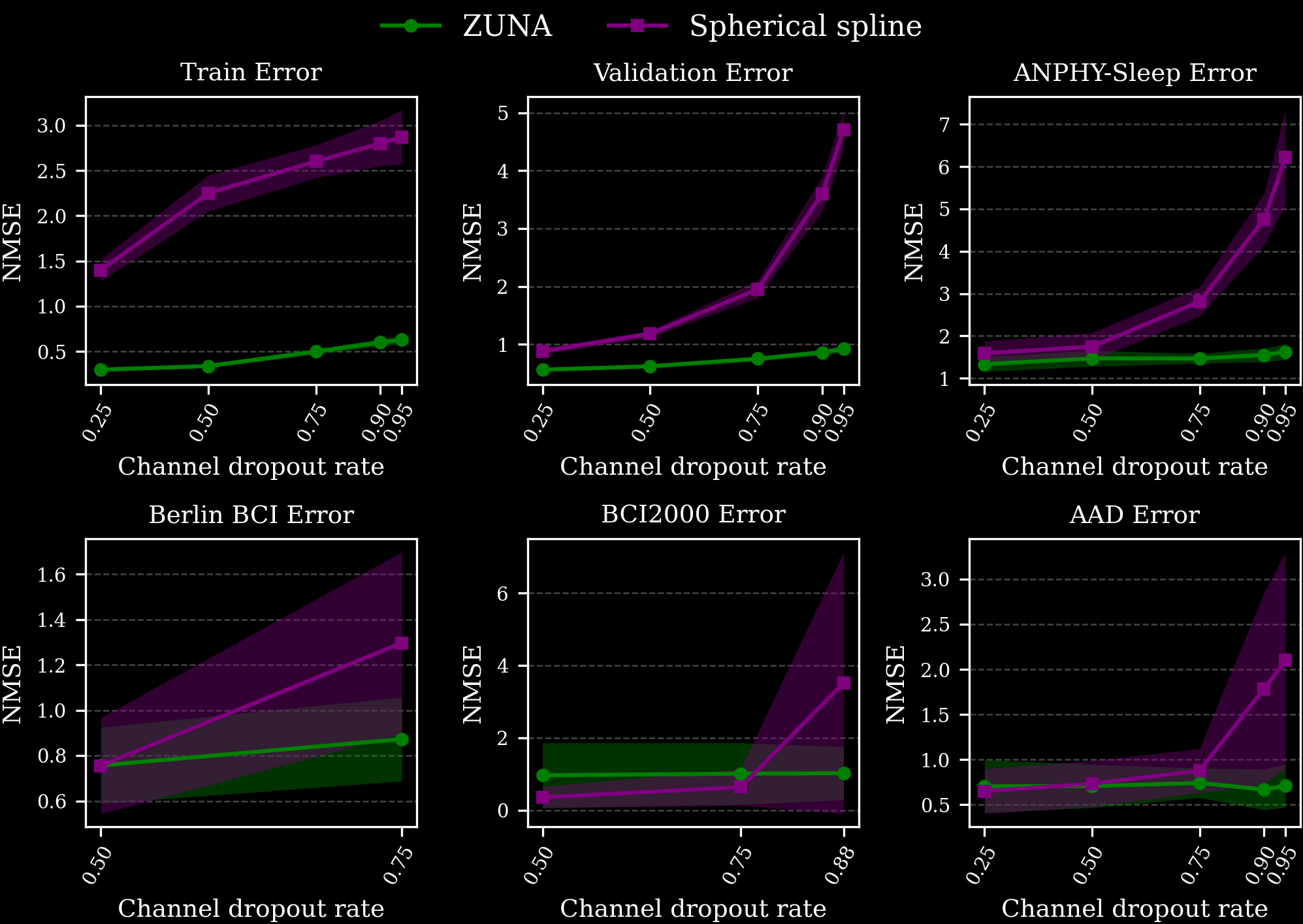

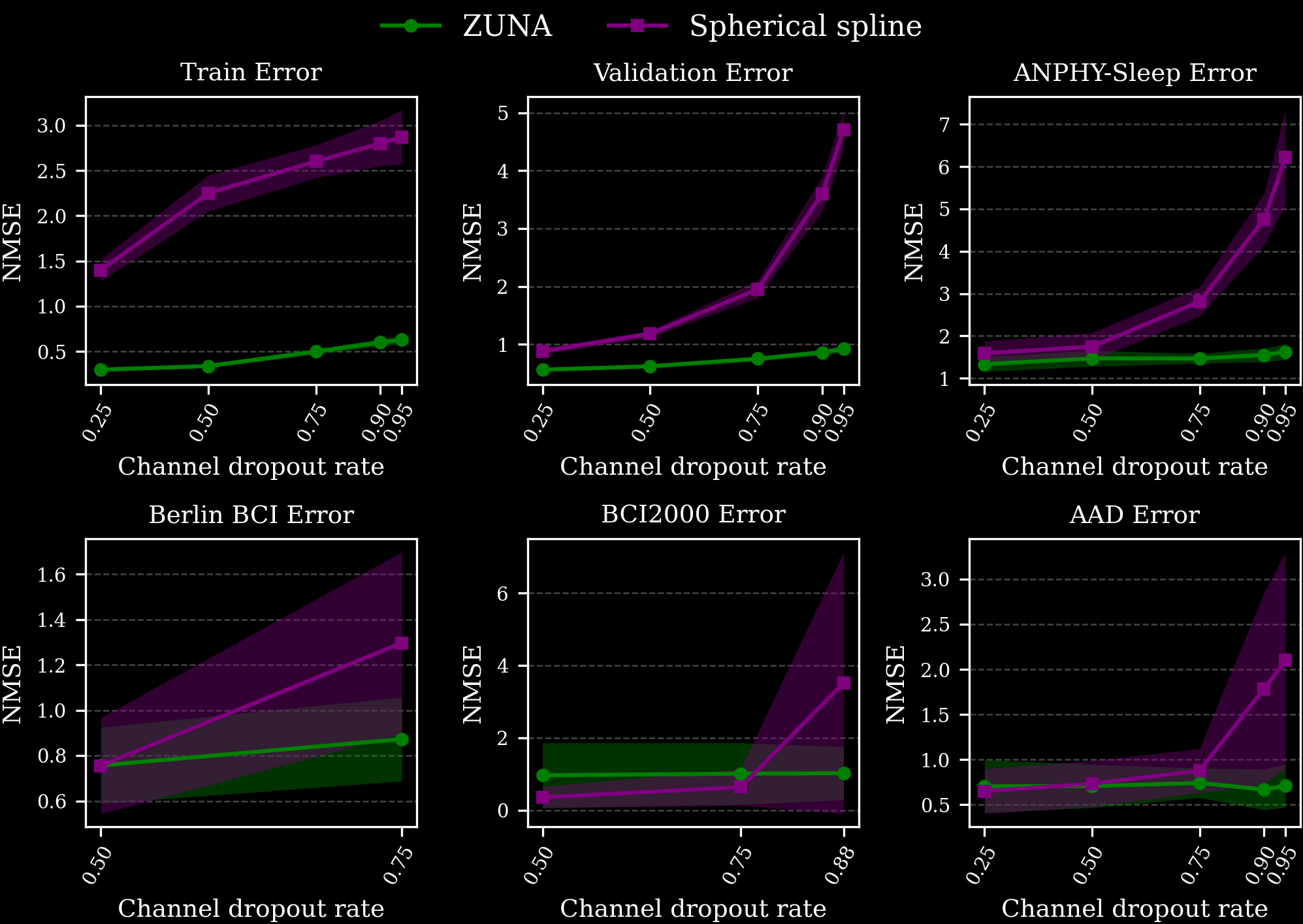

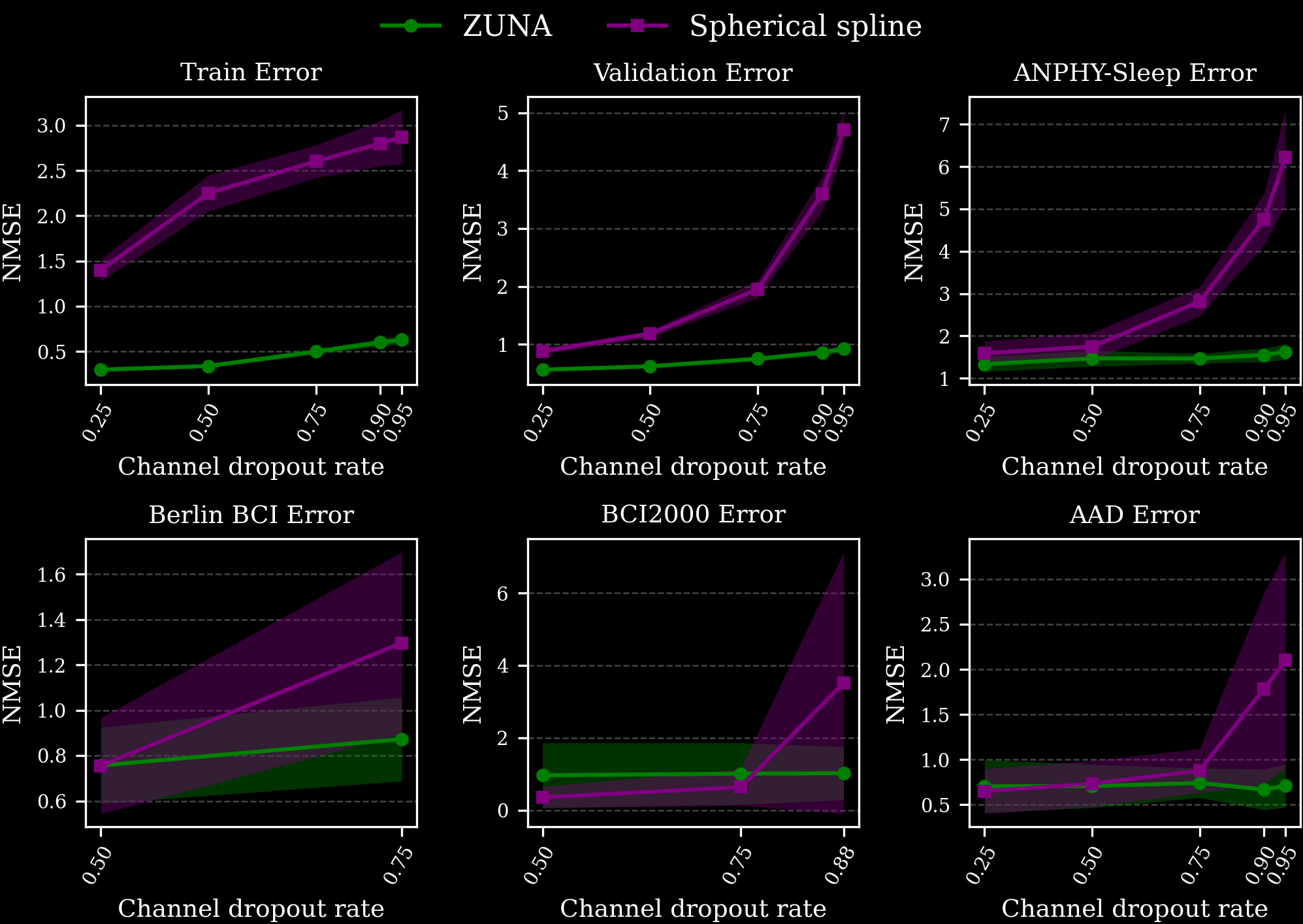

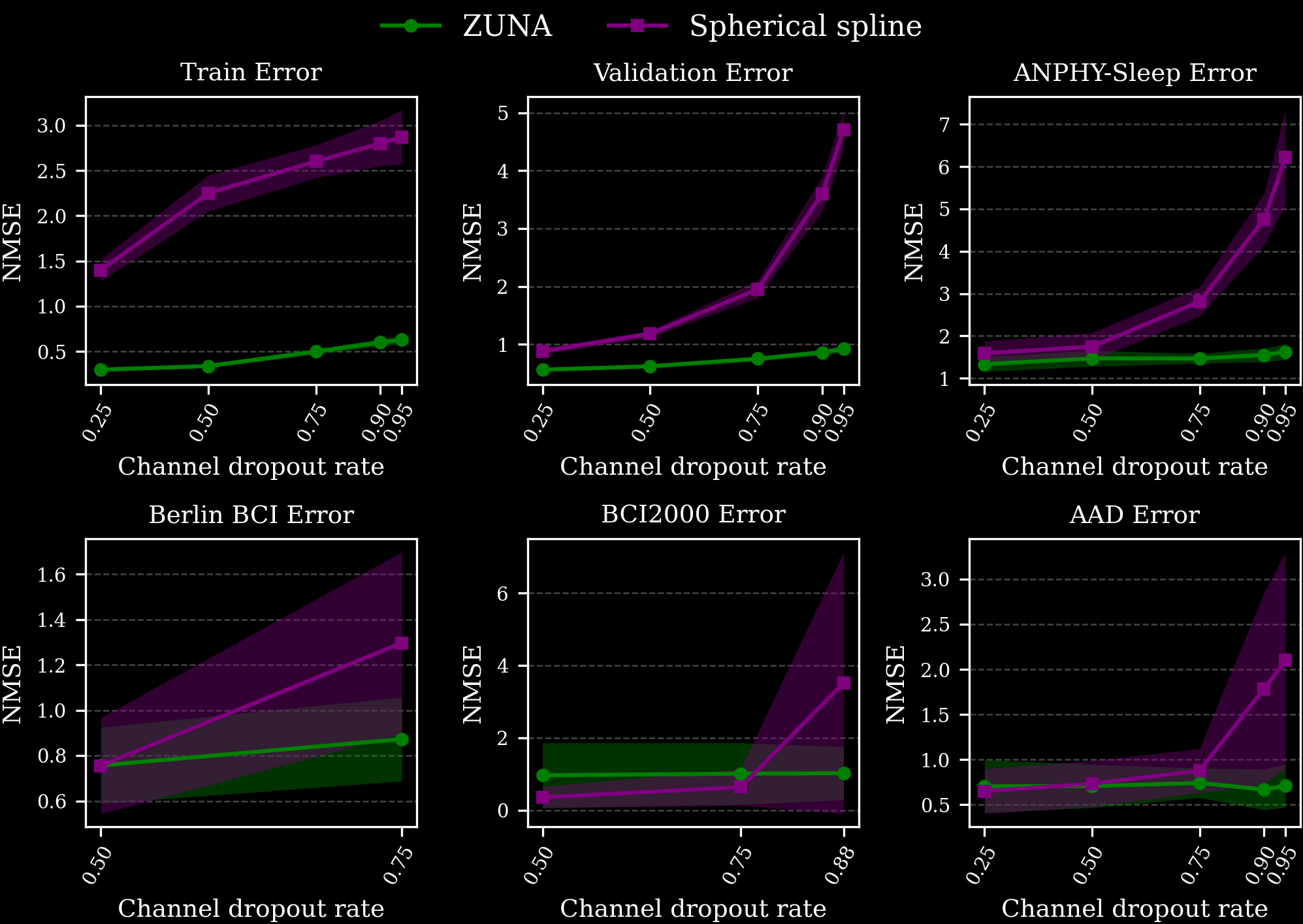

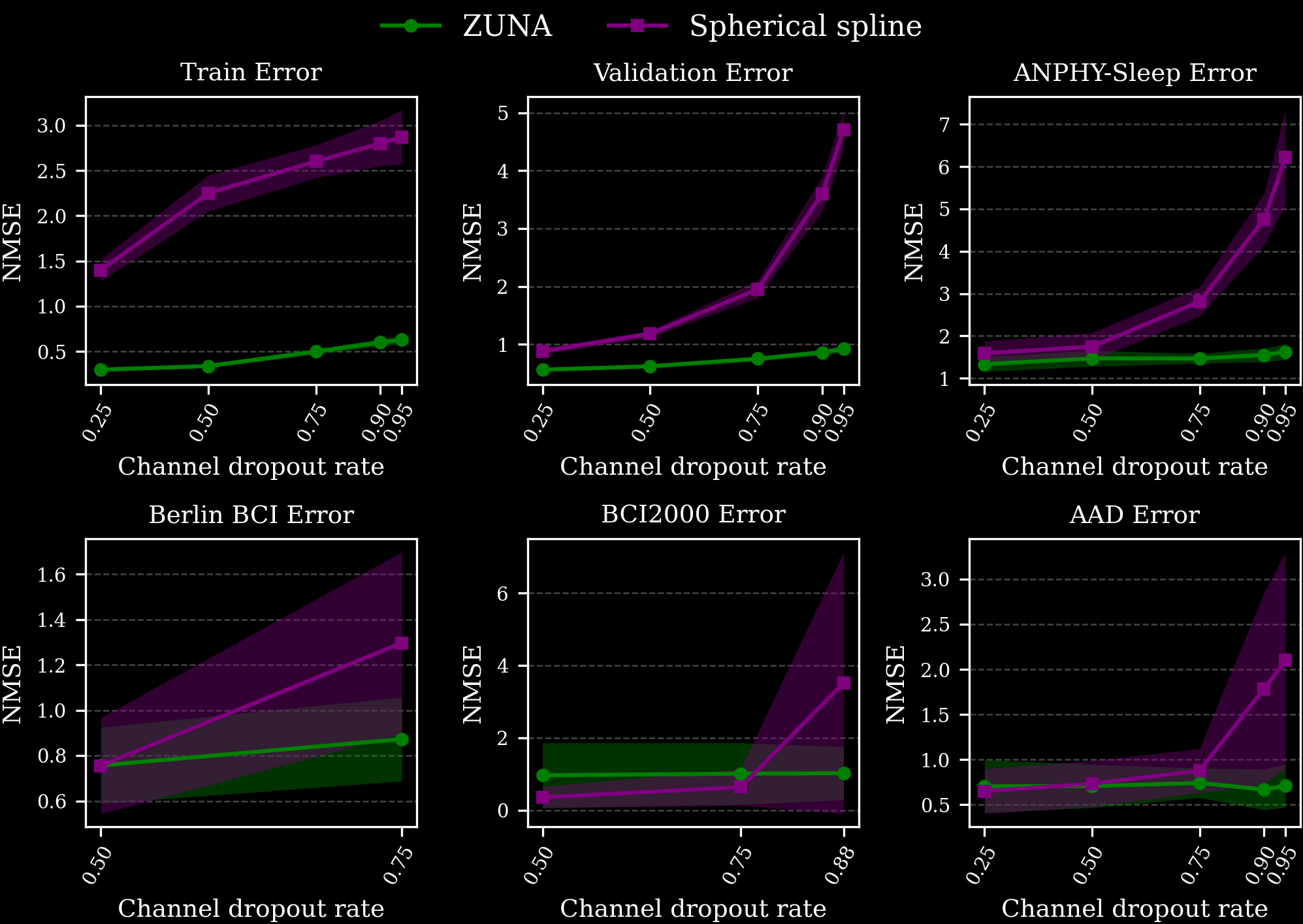

We compared ZUNA against spherical spline interpolation, which is ubiquitous among EEG researchers and practitioners and is the default in the widely used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively few channels are dropped, but its performance falls off as more channels are lost. We evaluated ZUNA on a validation set (a held-out portion of our training corpus that the model had not seen) and on several unseen test datasets with different distributions.

Across datasets, we find that ZUNA outperforms spherical spline interpolation by a significant margin, and its advantage grows at higher levels of dropout. When more than 75% of channels are dropped, ZUNA outperforms spherical spline interpolation on every dataset.
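The evaluation protocol above (drop a growing fraction of channels, reconstruct them, and score the result) can be sketched as a small harness. The error metric, a normalized MSE on the dropped channels, is an assumption, since the exact metric is not given here; `dropout_sweep` and the toy `nearest_neighbor_reconstruct` baseline are hypothetical stand-ins for where spherical spline interpolation or a learned model would plug in.

```python
import numpy as np


def nearest_neighbor_reconstruct(obs_data, obs_pos, miss_pos):
    """Toy baseline: copy each missing channel from its closest observed electrode."""
    dist = np.linalg.norm(miss_pos[:, None, :] - obs_pos[None, :, :], axis=-1)
    return obs_data[np.argmin(dist, axis=1)]


def dropout_sweep(data, pos, reconstruct, rates, n_trials=20, seed=0):
    """Mean normalized MSE on the dropped channels for each dropout rate.

    data: (n_channels, n_samples); pos: (n_channels, 3).
    reconstruct(obs_data, obs_pos, miss_pos) must return (n_missing, n_samples).
    """
    rng = np.random.default_rng(seed)
    n_ch = data.shape[0]
    results = {}
    for rate in rates:
        # Keep at least one observed and at least one dropped channel.
        n_drop = min(max(int(round(rate * n_ch)), 1), n_ch - 1)
        errors = []
        for _ in range(n_trials):
            drop = rng.choice(n_ch, size=n_drop, replace=False)
            keep = np.setdiff1d(np.arange(n_ch), drop)
            estimate = reconstruct(data[keep], pos[keep], pos[drop])
            truth = data[drop]
            # MSE normalized by the variance of the true signal on dropped channels.
            errors.append(np.mean((estimate - truth) ** 2) / np.var(truth))
        results[rate] = float(np.mean(errors))
    return results


# Usage: random arrays stand in for a real recording and its electrode positions.
rng = np.random.default_rng(0)
pos = rng.standard_normal((32, 3))
eeg = rng.standard_normal((32, 1000))
for rate, err in dropout_sweep(eeg, pos, nearest_neighbor_reconstruct, [0.25, 0.5, 0.75, 0.9]).items():
    print(f"{rate:.0%} dropped: normalized MSE = {err:.3f}")
```

Averaging over several random masks per rate matters because, at a fixed dropout level, which channels happen to be removed can change the error substantially.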

ZUNA uses a diffusion autoencoder architecture with a transformer backbone. An encoder maps EEG signals into a shared latent space, and a decoder reconstructs EEG signals from these latents. We trained with a masked reconstruction loss and a heavy dropout scheme, which enables ZUNA to denoise existing channels and predict new ones at inference time.
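A minimal sketch of what one training example might look like under this recipe, assuming details the description above does not specify: a per-example channel-dropout rate drawn uniformly between 10% and 90%, a linear interpolation between the clean signal and Gaussian noise as the diffusion corruption (rectified-flow style), and an MSE loss restricted to the dropped channels. The encoder and decoder are omitted, and all function names are hypothetical.

```python
import numpy as np


def make_training_example(x, rng, min_drop=0.1, max_drop=0.9):
    """Hypothetical heavy channel dropout plus diffusion noising for one EEG window.

    x: clean EEG of shape (n_channels, n_samples).
    Returns (encoder input, per-channel keep mask, noisy decoder input, noise level t).
    """
    n_ch = x.shape[0]
    rate = rng.uniform(min_drop, max_drop)            # heavy, variable dropout rate
    n_drop = min(max(int(round(rate * n_ch)), 1), n_ch - 1)
    keep = np.ones(n_ch, dtype=bool)
    keep[rng.choice(n_ch, size=n_drop, replace=False)] = False
    enc_in = np.where(keep[:, None], x, 0.0)          # dropped channels zeroed out
    t = rng.uniform()                                 # noise level in [0, 1)
    eps = rng.standard_normal(x.shape)
    x_t = (1.0 - t) * x + t * eps                     # assumed linear noising schedule
    return enc_in, keep, x_t, t


def masked_reconstruction_loss(pred, x, keep, masked_only=True):
    """MSE between predicted and clean signal, optionally only on dropped channels."""
    err = (pred - x) ** 2
    if masked_only:
        return float(err[~keep].mean())
    return float(err.mean())


# Usage with a placeholder prediction in place of a real decoder output.
rng = np.random.default_rng(0)
x = rng.standard_normal((32, 512))    # one clean EEG window (channels, samples)
enc_in, keep, x_t, t = make_training_example(x, rng)
# A real model would encode enc_in, then decode x_t at noise level t into `pred`.
pred = x_t
loss = masked_reconstruction_loss(pred, x, keep)
```

Drawing a fresh, often large, dropout rate for every example forces the model to infer absent channels from very sparse inputs, which is the regime where the comparison above shows the largest gains over interpolation.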

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution (the number of electrodes on the device) for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.
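Working on electrode coordinates removes the fixed-montage assumption because each channel can become a token that carries its own position. A recording is then just a variable-length set of tokens. The sketch below is hypothetical: this post does not describe ZUNA's actual input encoding. The Fourier-feature coordinate embedding, the observed/query flag column, and the function names are all our assumptions.

```python
import numpy as np


def coordinate_features(xyz, n_freqs=4):
    """Fourier features of electrode positions (hypothetical encoding)."""
    freqs = 2.0 ** np.arange(n_freqs)  # 1, 2, 4, 8
    angles = xyz[:, :, None] * freqs  # (n_ch, 3, n_freqs)
    feats = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return feats.reshape(len(xyz), -1)  # (n_ch, 3 * 2 * n_freqs)


def build_channel_tokens(signals, xyz, query_xyz, n_freqs=4):
    """Stack observed channels and query positions into one token set.

    signals:   (n_obs, n_samples) observed EEG
    xyz:       (n_obs, 3) observed electrode coordinates
    query_xyz: (n_query, 3) positions to reconstruct (no signal yet)
    The last column is 1 for observed channels and 0 for queries.
    """
    n_obs, n_samples = signals.shape
    n_query = len(query_xyz)
    obs = np.hstack([signals, coordinate_features(xyz, n_freqs),
                     np.ones((n_obs, 1))])
    qry = np.hstack([np.zeros((n_query, n_samples)),
                     coordinate_features(query_xyz, n_freqs),
                     np.zeros((n_query, 1))])
    return np.vstack([obs, qry])


# Usage: a 4-channel device queried at 60 extra (toy, random) positions.
rng = np.random.default_rng(0)
obs_sig = rng.normal(size=(4, 256))
obs_xyz = rng.normal(size=(4, 3))
query_xyz = rng.normal(size=(60, 3))
tokens = build_channel_tokens(obs_sig, obs_xyz, query_xyz)
print(tokens.shape)  # (64, 281)
```

Under this kind of representation, a 4-channel headband and a 256-channel cap would produce token sets of different lengths but the same token width. A set-based model such as a transformer can consume both.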

We compared ZUNA with spherical spline interpolation, which is ubiquitous among EEG researchers and practitioners and is the default in the widely used MNE package. Spherical spline interpolation is a surprisingly robust baseline when only a small number of channels are dropped, but its performance deteriorates as the data become more degraded. We evaluated ZUNA on a validation set (a held-out portion of our training data that the model had not seen) and on several unseen test datasets drawn from different distributions.

Across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, and the advantage grows with the level of dropout. When more than 75% of channels are dropped, ZUNA outperforms spherical spline interpolation on every dataset.
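An evaluation like the one described can be sketched as a dropout sweep: hide a random subset of channels, reconstruct them from the rest, and score the result against the held-out ground truth. This post does not state the metric or the number of trials, so the normalized MSE, the trial count, and the inverse-distance placeholder reconstructor below are our assumptions. In a real comparison, spherical spline interpolation and ZUNA would each be passed in as `reconstruct`.

```python
import numpy as np


def inverse_distance_reconstruct(kept, pos_kept, pos_drop, power=2.0):
    """Placeholder reconstructor: inverse-distance-weighted average."""
    d = np.linalg.norm(pos_drop[:, None, :] - pos_kept[None, :, :], axis=-1)
    w = 1.0 / np.maximum(d, 1e-9) ** power
    w /= w.sum(axis=1, keepdims=True)
    return w @ kept


def dropout_curve(data, pos, rates, reconstruct, n_trials=10, seed=0):
    """Mean normalized MSE on dropped channels for each dropout rate.

    data: (n_channels, n_samples) clean reference recording
    pos:  (n_channels, 3) electrode coordinates
    reconstruct(kept, pos_kept, pos_drop) -> (n_drop, n_samples)
    """
    rng = np.random.default_rng(seed)
    n_ch = data.shape[0]
    curve = {}
    for rate in rates:
        # Drop at least one channel and keep at least one.
        n_drop = min(max(int(round(rate * n_ch)), 1), n_ch - 1)
        errs = []
        for _ in range(n_trials):
            drop = rng.choice(n_ch, size=n_drop, replace=False)
            keep = np.setdiff1d(np.arange(n_ch), drop)
            pred = reconstruct(data[keep], pos[keep], pos[drop])
            truth = data[drop]
            # Normalize by signal power so recordings at different scales compare.
            errs.append(np.mean((pred - truth) ** 2) / np.mean(truth ** 2))
        curve[rate] = float(np.mean(errs))
    return curve


# Usage on random toy data (errors will be large; the point is the harness).
rng = np.random.default_rng(1)
toy_pos = rng.normal(size=(32, 3))
toy_data = rng.normal(size=(32, 1000))
curve = dropout_curve(toy_data, toy_pos, [0.25, 0.5, 0.75, 0.9],
                      inverse_distance_reconstruct)
```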

ZUNA uses a diffusion autoencoder architecture with a transformer backbone. An encoder maps EEG signals into a shared latent space, and a decoder reconstructs EEG signals from those latents. We trained with a masked reconstruction loss and a heavy dropout scheme, which enables ZUNA both to denoise existing channels and to predict new ones at inference time.
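The masking logic behind a loss like this can be sketched in a few lines. This is a schematic under stated assumptions, not ZUNA's training code. The per-example dropout rate drawn uniformly up to 90%, zeroing hidden channels, and the optional up-weighting of masked channels are all our choices. The sketch also ignores the diffusion objective itself: a diffusion decoder is trained on a denoising target rather than a plain MSE against the signal.

```python
import numpy as np


def sample_channel_mask(n_channels, rng, max_rate=0.9):
    """Boolean mask, True = channel hidden from the encoder (assumed scheme)."""
    rate = rng.uniform(0.0, max_rate)  # heavy, per-example dropout rate
    n_drop = min(int(rate * n_channels), n_channels - 1)
    mask = np.zeros(n_channels, dtype=bool)
    mask[rng.choice(n_channels, size=n_drop, replace=False)] = True
    return mask


def corrupt_inputs(signals, mask):
    """Zero out masked channels before they reach the encoder."""
    return np.where(mask[:, None], 0.0, signals)


def masked_reconstruction_loss(pred, target, mask, masked_weight=1.0):
    """MSE over all channels, with masked channels optionally up-weighted.

    Visible channels teach denoising; masked channels teach predicting
    signals the model never saw.
    """
    sq_err = np.mean((pred - target) ** 2, axis=-1)  # per-channel error
    weights = np.where(mask, masked_weight, 1.0)
    return float(np.sum(weights * sq_err) / np.sum(weights))
```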

To handle EEG data, which can contain an arbitrary number of channels in arbitrary positions on the scalp, we introduce two architectural innovations:

To train ZUNA, we curated approximately 2 million channel-hours of EEG data from a wide range of publicly available sources. All data were passed through a standardized preprocessing pipeline to make them suitable for large-scale foundation model training.
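A channel-hour is one channel recorded for one hour, so a corpus total is the sum of channel count times duration over all recordings. For scale, the same 2 million channel-hours would correspond to about 31,250 hours of 64-channel recording, or about 105,000 hours with a 19-channel 10–20 montage. These are illustrative conversions, not a breakdown of the actual corpus. The `Recording` type below is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Recording:
    n_channels: int
    duration_s: float  # recording length in seconds


def total_channel_hours(recordings):
    """Sum of (channel count x duration in hours) over all recordings."""
    return sum(r.n_channels * r.duration_s / 3600.0 for r in recordings)


def equivalent_hours(channel_hours, n_channels):
    """Wall-clock hours the same budget would cover at a fixed channel count."""
    return channel_hours / n_channels


corpus = [Recording(64, 3600.0), Recording(19, 1800.0)]
print(total_channel_hours(corpus))  # 73.5
print(equivalent_hours(2_000_000, 64))  # 31250.0
```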

We plan to open-source our data and data infrastructure. Large, high-quality public datasets are essential for progress in any deep learning domain, EEG included.

We are releasing ZUNA under a permissive license (Apache 2.0), together with practical tooling, so that it can be easily integrated into real-world workflows.

At 380 million parameters, ZUNA is lightweight enough to run quickly on a consumer GPU, and it can run on a CPU for many workloads.
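As a rough check on the hardware claim, weight memory is parameter count times bytes per parameter. The numeric precision ZUNA ships in is not stated here, so the sketch tabulates two common choices as assumptions. It counts weights only, not activations or other runtime overhead.

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}


def weight_memory_gb(n_params, dtype):
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(380e6, dtype):.2f} GB")
# float32: 1.52 GB
# bfloat16: 0.76 GB
```

Either figure fits comfortably in the memory of a typical consumer GPU.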

We hope researchers, clinicians, and builders put ZUNA to work, provide feedback, and help shape the next generation of brain foundation models.

Organizations or researchers interested in collaborating with Zyphra to improve future versions for specific needs or use cases should contact us at bci@zyphra.com.

Disclaimer: This website and related services ("Services") are provided for research use only and are not intended for use in the diagnosis, cure, mitigation, treatment, or prevention of any disease or health condition. The Services have not been validated for any medical or clinical use. The information provided through the Services is for general informational purposes only and is not a substitute for any professional medical or healthcare advice. We do not warrant that any information provided through the Services is accurate, complete, or useful to you. Any reliance you place on such information is strictly at your own risk.

To train ZUNA, we curated approximately 2 million channel-hours of EEG data from a wide range of publicly available sources. All data used a standardized preprocessing pipeline to make it suitable for large-scale foundation model training.

We plan to open-source our data and data infrastructure. Large, high-quality public datasets are essential for progress in any deep learning domain, EEG included.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution, the number of electrodes on the device, for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.

We compared the performance of ZUNA to spherical spline interpolation which is ubiquitously used by EEG researchers and practitioners and is included as the default in the widely-used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively small numbers of channels are dropped out, but degrades in performance with increasingly degraded data. We evaluated ZUNA’s performance on a validation set, a portion of our training data the model had not seen, and on several unseen test datasets of different distributions.

We find that across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, with the advantage increasing at high levels of dropout. When dropping more than 75% of channels, ZUNA outperforms spherical spline interpolation across all datasets.

ZUNA leverages a diffusion autoencoder architecture based on a transformer backbone. An encoder maps EEG signals to a shared latent space and a decoder reconstructs EEG signals from latents. We trained with a masked reconstruction loss and a heavy dropout scheme, enabling ZUNA to denoise existing channels and predict new ones during inference.

To handle EEG data, which can contain an arbitrary number of channels in arbitrary positions on the scalp, we introduce two architectural innovations:

Disclaimer: This website and related services (“Services”) are provided for research use only and is not intended for use in the diagnosis, cure, mitigation, treatment, or prevention of any disease or health condition. The Services have not been validated for any medical or clinical use. The information provided through the Services is for general informational purposes only and is not a substitute for any professional medical or healthcare advice. We do not warrant that any information provided through the Services is accurate, complete, or useful to you. Any reliance you place on such information is strictly at your own risk.

To train ZUNA, we curated approximately 2 million channel-hours of EEG data from a wide range of publicly available sources. All data used a standardized preprocessing pipeline to make it suitable for large-scale foundation model training.

We plan to open-source our data and data infrastructure. Large, high-quality public datasets are essential for progress in any deep learning domain, EEG included.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution, the number of electrodes on the device, for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.

We compared the performance of ZUNA to spherical spline interpolation which is ubiquitously used by EEG researchers and practitioners and is included as the default in the widely-used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively small numbers of channels are dropped out, but degrades in performance with increasingly degraded data. We evaluated ZUNA’s performance on a validation set, a portion of our training data the model had not seen, and on several unseen test datasets of different distributions.

We find that across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, with the advantage increasing at high levels of dropout. When dropping more than 75% of channels, ZUNA outperforms spherical spline interpolation across all datasets.

ZUNA leverages a diffusion autoencoder architecture based on a transformer backbone. An encoder maps EEG signals to a shared latent space and a decoder reconstructs EEG signals from latents. We trained with a masked reconstruction loss and a heavy dropout scheme, enabling ZUNA to denoise existing channels and predict new ones during inference.

To handle EEG data, which can contain an arbitrary number of channels in arbitrary positions on the scalp, we introduce two architectural innovations:

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution, the number of electrodes on the device, for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

We compared the performance of ZUNA to spherical spline interpolation which is ubiquitously used by EEG researchers and practitioners and is included as the default in the widely-used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively small numbers of channels are dropped out, but degrades in performance with increasingly degraded data. We evaluated ZUNA’s performance on a validation set, a portion of our training data the model had not seen, and on several unseen test datasets of different distributions.

We find that across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, with the advantage increasing at high levels of dropout. When dropping more than 75% of channels, ZUNA outperforms spherical spline interpolation across all datasets.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution, the number of electrodes on the device, for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.

We compared the performance of ZUNA to spherical spline interpolation which is ubiquitously used by EEG researchers and practitioners and is included as the default in the widely-used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively small numbers of channels are dropped out, but degrades in performance with increasingly degraded data. We evaluated ZUNA’s performance on a validation set, a portion of our training data the model had not seen, and on several unseen test datasets of different distributions.

We find that across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, with the advantage increasing at high levels of dropout. When dropping more than 75% of channels, ZUNA outperforms spherical spline interpolation across all datasets.

ZUNA leverages a diffusion autoencoder architecture based on a transformer backbone. An encoder maps EEG signals to a shared latent space and a decoder reconstructs EEG signals from latents. We trained with a masked reconstruction loss and a heavy dropout scheme, enabling ZUNA to denoise existing channels and predict new ones during inference.

Zyphra is excited to announce ZUNA, our first foundation model trained on brain data. We believe thought-to-text will be the next major modality beyond language, audio, and vision enabled by noninvasive brain–computer interfaces (BCIs).

ZUNA is an early effort to build general foundation models of neural signals that can be used to understand and decode brain states. ZUNA is a key component in our mission to build human-aligned superintelligence. Over time, we see these models forming the foundation of thought-to-text agentic systems.

ZUNA is a 380M-parameter diffusion autoencoder trained to denoise, reconstruct, and upsample scalp-EEG signals. Given a subset of EEG channels, ZUNA can:

EEG data is prevalent in clinics, research labs, and increasingly consumer devices. Yet unlike text, images, or audio, the EEG domain still lacks general and powerful foundation models.

Data fragmentation is a major reason for the lack of EEG foundation models. EEG datasets are typically small, collected under different protocols, and distributed across many institutions. This makes it difficult to aggregate data at the scale that has powered progress in other modalities.

And yet, there is clearly immense information and structure contained in EEG signals, which could power downstream tasks like understanding emotional and attentional states to decoding thoughts and dreams.

We aim to apply the classical deep learning methodology of creating general pretrained foundation models upon this data, with the goal of discovering generalizable representations underlying these signals and developing a foundation model that will translate thought-to-text.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.

The standard approach for handling missing or noisy channels is spherical spline interpolation, which is the default method in the widely-used MNE package1. While simple and fast, this method has only a surface-level understanding of EEG structure and can result in poor or misleading reconstructions especially as channel degradation increases.

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution, the number of electrodes on the device, for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.

Traditional EEG analysis pipelines assume fixed montages (e.g., 10–20 or 10–10). ZUNA operates directly on electrode coordinates, allowing it to generalize across arbitrary channel counts and layouts. This makes cross-dataset and cross-device analyses substantially easier.

We compared the performance of ZUNA to spherical spline interpolation which is ubiquitously used by EEG researchers and practitioners and is included as the default in the widely-used MNE package. Spherical spline interpolation is a surprisingly robust baseline when relatively small numbers of channels are dropped out, but degrades in performance with increasingly degraded data. We evaluated ZUNA’s performance on a validation set, a portion of our training data the model had not seen, and on several unseen test datasets of different distributions.

We find that across datasets, ZUNA outperforms spherical spline interpolation by a significant margin, with the advantage increasing at high levels of dropout. When dropping more than 75% of channels, ZUNA outperforms spherical spline interpolation across all datasets.

ZUNA leverages a diffusion autoencoder architecture based on a transformer backbone. An encoder maps EEG signals to a shared latent space and a decoder reconstructs EEG signals from latents. We trained with a masked reconstruction loss and a heavy dropout scheme, enabling ZUNA to denoise existing channels and predict new ones during inference.

To handle EEG data, which can contain an arbitrary number of channels in arbitrary positions on the scalp, we introduce two architectural innovations:

To train ZUNA, we curated approximately 2 million channel-hours of EEG data from a wide range of publicly available sources. All data used a standardized preprocessing pipeline to make it suitable for large-scale foundation model training.

We plan to open-source our data and data infrastructure. Large, high-quality public datasets are essential for progress in any deep learning domain, EEG included.

We are releasing ZUNA under a permissive license (Apache 2.0), along with practical tooling so it can be easily integrated into real-world workflows.

At 380 million parameters, ZUNA is lightweight enough to run quickly on a consumer GPU and can be used on CPU for many workloads.
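As a back-of-the-envelope check of the size claim, we can compute the memory needed just to hold 380 million weights. The storage formats are assumptions: 4-byte float32 or 2-byte bfloat16. The post does not say which precision the released checkpoint uses. Activations and framework overhead come on top of this.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 380e6  # parameter count stated for ZUNA

for dtype, nbytes in [("float32", 4), ("bfloat16", 2)]:
    print(dtype, weight_memory_gb(N_PARAMS, nbytes), "GB")
# float32 1.52 GB
# bfloat16 0.76 GB
```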

We hope researchers, clinicians, and builders put ZUNA to work, provide feedback, and help shape the next generation of brain foundation models.

Organizations or researchers interested in collaborating with Zyphra to improve future versions for specific needs or use cases should contact us at bci@zyphra.com.

Disclaimer: This website and related services (“Services”) are provided for research use only and are not intended for use in the diagnosis, cure, mitigation, treatment, or prevention of any disease or health condition. The Services have not been validated for any medical or clinical use. The information provided through the Services is for general informational purposes only and is not a substitute for any professional medical or healthcare advice. We do not warrant that any information provided through the Services is accurate, complete, or useful to you. Any reliance you place on such information is strictly at your own risk.

EEG recordings are frequently degraded by channel dropouts, motion-related artifacts, and limited channel counts typical of academic and consumer-grade hardware.
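Before any reconstruction, the degraded channels have to be identified. One common heuristic flags channels that are flat or whose log-variance is an outlier relative to the other channels, using a median/MAD robust z-score. This is not ZUNA's method, and its thresholds are hypothetical.

```python
import numpy as np


def find_bad_channels(x, flat_thresh=1e-12, z_thresh=5.0):
    """Flag flat or abnormally high-variance channels.

    x: (n_channels, n_samples). Returns a boolean array, True = bad.
    The thresholds are illustrative defaults, not tuned values.
    """
    var = x.var(axis=1)
    flat = var < flat_thresh
    log_var = np.log(var + flat_thresh)          # offset avoids log(0) for flat channels
    ref = log_var[~flat]                          # statistics from non-flat channels
    med = np.median(ref)
    mad = 1.4826 * np.median(np.abs(ref - med))  # MAD scaled to a Gaussian std
    z = (log_var - med) / (mad + 1e-12)          # robust z-score
    return flat | (z > z_thresh)


# Synthetic example: 16 clean 10 Hz sinusoids, one flat line, one huge-amplitude channel.
t = np.arange(1000) / 1000.0
x = np.stack([np.sin(2 * np.pi * 10 * t + k) for k in range(16)])
x[3] = 0.0        # e.g. a disconnected electrode
x[7] *= 100.0     # e.g. a loose, noisy contact
print(np.flatnonzero(find_bad_channels(x)))  # [3 7]
```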

The standard approach for handling missing or noisy channels is spherical spline interpolation, the default method in the widely used MNE package¹. While simple and fast, this method captures only surface-level spatial structure in EEG and can produce poor or misleading reconstructions, especially as channel degradation increases.
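For reference, the spherical spline baseline itself is compact. The NumPy sketch below follows the Perrin et al. (1989) formulation, using a Legendre-series kernel g(cos θ) with stiffness m = 4, truncated here to 7 terms, plus a small ridge term α = 1e-5. These constants resemble those used in MNE's implementation, but treat them as assumptions and check the MNE source before relying on them. Electrode positions are assumed to be centered on the head sphere. In MNE itself you would list the channels in `raw.info['bads']` and call `raw.interpolate_bads()`.

```python
import numpy as np
from numpy.polynomial import legendre


def spline_kernel(cosang, m=4, n_terms=7):
    """Spherical spline kernel g(cos θ) = Σ (2n+1) / (4π n^m (n+1)^m) P_n(cos θ)."""
    n = np.arange(1, n_terms + 1)
    coeffs = (2 * n + 1) / (4 * np.pi * n ** m * (n + 1) ** m)
    return legendre.legval(cosang, np.concatenate([[0.0], coeffs]))  # no P_0 term


def spherical_spline_matrix(pos_from, pos_to, alpha=1e-5):
    """Return W with shape (n_to, n_from) such that signals_to ≈ W @ signals_from."""
    pos_from = pos_from / np.linalg.norm(pos_from, axis=1, keepdims=True)
    pos_to = pos_to / np.linalg.norm(pos_to, axis=1, keepdims=True)
    n_from, n_to = len(pos_from), len(pos_to)
    G_from = spline_kernel(np.clip(pos_from @ pos_from.T, -1.0, 1.0))
    G_from[np.diag_indices(n_from)] += alpha  # ridge regularization
    G_to = spline_kernel(np.clip(pos_to @ pos_from.T, -1.0, 1.0))
    # Augmented system: spline weights c plus a constant c0, with sum(c) = 0.
    C = np.block([[G_from, np.ones((n_from, 1))],
                  [np.ones((1, n_from)), np.zeros((1, 1))]])
    C_inv = np.linalg.pinv(C)
    return np.hstack([G_to, np.ones((n_to, 1))]) @ C_inv[:, :-1]


# Hypothetical layout: reconstruct 2 missing electrodes from 30 good ones.
rng = np.random.default_rng(0)
pos_good = rng.standard_normal((30, 3))
pos_bad = rng.standard_normal((2, 3))
W = spherical_spline_matrix(pos_good, pos_bad)
x_good = rng.standard_normal((30, 1000))  # (channels, samples)
x_bad_hat = W @ x_good                    # shape (2, 1000)
```

Each missing channel is a fixed linear combination of the observed ones, determined only by electrode geometry. This is why the method is fast, and also why it cannot exploit learned structure in the signals.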

ZUNA replaces spherical spline interpolation with a learned, data-driven approach. By leveraging representations learned across a large and diverse EEG corpus, ZUNA can reconstruct signals in a way that captures the underlying patterns in brain activity rather than simple spatial smoothing.

This is not just exploratory research; it solves concrete, everyday problems faced by anyone working with EEG.

ZUNA is a foundation model purpose-built to address the most persistent and costly limitations in EEG research and development. ZUNA enables the following capabilities:

EEG datasets often contain sessions that are partially unusable due to corrupted channels or intermittent dropout. These sessions are frequently discarded, reducing sample size and statistical power. ZUNA enables researchers to recover usable signals from such recordings, effectively increasing dataset size without additional data collection.

Many modern EEG devices trade spatial resolution (the number of electrodes on the device) for accessibility. ZUNA allows low-channel systems to be mapped into a higher-resolution signal space, narrowing the gap between consumer-grade and lab-grade recordings and enabling analyses that would otherwise be infeasible.
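Under a masked-reconstruction formulation, upsampling can be posed as asking the model to fill in channels that were never recorded. The sketch below shows one hypothetical way to assemble such a query from a low-channel device. The function name, the zero-filling, and the mask convention are illustrative assumptions, not ZUNA's released interface.

```python
import numpy as np


def build_upsampling_query(x_obs, pos_obs, pos_target):
    """Combine recorded channels with empty target channels to be predicted.

    x_obs: (n_obs, n_samples) recorded signals; pos_obs: (n_obs, 3) positions;
    pos_target: (n_new, 3) positions of electrodes to synthesize.
    Returns (signals, positions, to_predict), where to_predict marks new channels.
    """
    n_obs, n_samples = x_obs.shape
    n_new = len(pos_target)
    signals = np.vstack([x_obs, np.zeros((n_new, n_samples))])  # placeholders for targets
    positions = np.vstack([pos_obs, pos_target])
    to_predict = np.concatenate([np.zeros(n_obs, dtype=bool), np.ones(n_new, dtype=bool)])
    return signals, positions, to_predict


# Example: a 4-channel headband queried at 64 target electrode positions.
rng = np.random.default_rng(0)
signals, positions, to_predict = build_upsampling_query(
    rng.standard_normal((4, 256)), rng.standard_normal((4, 3)), rng.standard_normal((64, 3)))
print(signals.shape, positions.shape, int(to_predict.sum()))  # (68, 256) (68, 3) 64
```

A trained model would then return values for the rows where `to_predict` is True. The same query format also covers dropout recovery, where the targets are the positions of the bad channels.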
